Common Language

Computers are complex machines that require near-constant communication and synchronization to continue functioning. These systems consist of numerous modular parts, each containing its own components and core functions. From the procedure that powers on your computer to the process that controls electrons within a chip, standards define our global infrastructure.

Facilitating

https://en.m.wikipedia.org/wiki/Lingua_franca

A lingua franca (/ˌlɪŋɡwə ˈfræŋkə/; lit. 'Frankish tongue'), also known as a bridge language, common language, trade language, auxiliary language, link language or language of wider communication (LWC), is a language systematically used to make communication possible between groups of people who do not share a native language or dialect, particularly when it is a third language that is distinct from both of the speakers' native languages.[1]

A global computer network, more commonly known as the Internet, requires a great deal of planning. We need to ensure that computers are created in a way that promotes synergy and harmony: a method for ensuring parts can work together even when designed by competitors. Built upon this foundation, we need to reach an accord about how they communicate.

As a collective, we convene to curate standards, or mutually agreed-upon definitions of a system or how it operates. They are a lingua franca, allowing us to bridge systems through a common language. They set the stage for collaboration and creation on a massive scale by ensuring our ability to share information across a divide.

Coming to an agreement on standards can be a contentious process that is never quite finished. As computers gained prominence, many corporations vied to create their own proprietary standards in an attempt to corner the market. Computer parts created to one manufacturer's standards were not compatible with another's.

https://en.m.wikipedia.org/wiki/Protocol_Wars

The Protocol Wars were a long-running debate in computer science that occurred from the 1970s to the 1990s, when engineers, organizations and nations became polarized over the issue of which communication protocol would result in the best and most robust networks. We were debating how the Internet would take shape, which required finding a robust and reliable way for computers to connect across vast distances.

Everyone from engineers to corporations explored their own ideas about how the Internet should work. This culminated in the Internet–OSI Standards War in the 1980s and early 1990s, which was ultimately "won" by the Internet protocol suite (TCP/IP) by the mid-1990s, when it became the dominant protocol suite through rapid adoption of the Internet. It wasn't until then that we firmly agreed on a standard.

In the late 1960s and early 1970s, the pioneers of packet switching technology built computer networks providing data communication, that is, the ability to transfer data between points or nodes. As more of these networks emerged in the mid to late 1970s, the debate about communication protocols became a "battle for access standards". An international collaboration between several national postal, telegraph and telephone (PTT) providers and commercial operators led to the X.25 standard in 1976, which was adopted on public data networks providing global coverage. Separately, proprietary data communication protocols emerged, most notably IBM's Systems Network Architecture in 1974 and Digital Equipment Corporation's DECnet in 1975.

https://en.m.wikipedia.org/wiki/Unix_wars

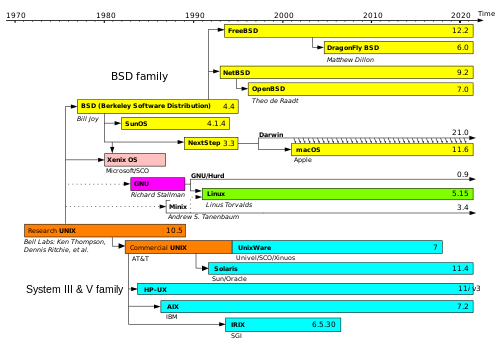

The Unix wars were struggles between vendors to set a standard for the Unix operating system in the late 1980s and early 1990s, arriving just as the debate over networking standards was being settled.

We were learning how to create computers at the same speed as corporations were seeking to generate a profit from them. By creating divergent standards, corporations often locked consumers into their ecosystems. In economics, vendor lock-in, also known as proprietary lock-in or customer lock-in, makes a customer dependent on a vendor for products, unable to use another vendor without substantial switching costs.

The prevailing Internet standard was not created by any one corporation, but through a non-profit organization focused on the creation of open standards. Instead of being owned by one party, these standards are freely available to anyone so that no one can own the Internet. The use of open standards and alternative options makes systems tolerant of change, so that decisions can be postponed until more information is available or unforeseen events are addressed. Vendor lock-in does the opposite: it makes it difficult to move from one solution to another.

https://en.m.wikipedia.org/wiki/Vendor_lock-in


Standards

An open standard is a standard that is openly accessible and usable by anyone. It is also a common prerequisite that open standards use an open license that provides for extensibility. Typically, anybody can participate in their development due to their inherently open nature. There is no single definition, and interpretations vary with usage. Examples of open standards include the GSM, 4G, and 5G standards that allow most modern mobile phones to work world-wide.

Standards are the sets of rules for data communication that devices need in order to exchange information. It is important to follow the standards created by standards organizations such as IEEE, ISO, and ANSI.

- De Facto Standard: "De facto" means "in fact" or "by convention". These standards have not been approved by any organization but have been adopted because of their widespread use. They are often established by manufacturers.

For example: Apple and Google each established their own rules for their products, and those rules differ from one another, even though both companies also follow some of the same standards when manufacturing those products.

- De Jure Standard: "De jure" means "by law" or "by regulation". These standards have been approved by officially recognized bodies such as ANSI, ISO, and IEEE, and they must be followed wherever they are required.

For example: Data communication protocols such as SMTP, TCP, IP, and UDP must be implemented as specified whenever we use them.

Standards that provide standard measurement, symbology or terminology are sometimes known as definition standards. These create a foundation on which many other standards can be created. The metric system is an example of a definition standard.

Interoperability is a characteristic of a product or system to work with other products or systems.[1] While the term was initially defined for information technology or systems engineering services to allow for information exchange,[2] a broader definition takes into account social, political, and organizational factors that impact system-to-system performance.[3]

- Interoperability: Protocols and standards allow devices and systems to communicate. These protocols ensure network components can function together, avoiding risks and security gaps produced by incompatible or unsupported systems.

- Security Baseline: Protocols and standards contain security principles and best practices that help secure network infrastructure. These protocols allow organizations to protect sensitive data via encryption, authentication, and access controls.

- Vulnerability Management: Network security protocols and standards help organizations find and fix vulnerabilities. Many standards require regular security assessments, vulnerability scanning, and penetration testing to discover network infrastructure flaws. Organizations can prevent cyberattacks and address vulnerabilities by following these compliance criteria.

A Dictionary of Computer Science: 1. A publicly available definition of a hardware or software component, resulting from international, national, or industrial agreement.

Hardware

- Peripheral Component Interconnect (PCI) (a specification by Intel Corporation for plug-in boards to IBM-architecture PCs)

- Accelerated Graphics Port (AGP) (a specification by Intel Corporation for plug-in boards to IBM-architecture PCs)

- PCI Industrial Computer Manufacturers Group (PICMG) (an industry consortium developing Open Standards specifications for computer architectures )

- Synchronous dynamic random-access memory (SDRAM) and its DDR SDRAM variants (by JEDEC Solid State Technology Association)

- Universal Serial Bus (USB) (by USB Implementers Forum)

- Internet Protocol (IP) (a specification of the IETF for transmitting packets of data on a network – specifically, IETF RFC 791)

- MQTT (Message Queuing Telemetry Transport) is a lightweight, publish-subscribe network protocol that transports messages between devices.

- Transmission Control Protocol (TCP) (a specification of the IETF for implementing streams of data on top of IP – specifically, IETF RFC 793)

https://en.m.wikipedia.org/wiki/Internet_Standard

In computer network engineering, an Internet Standard is a normative specification of a technology or methodology applicable to the Internet. Internet Standards are created and published by the Internet Engineering Task Force (IETF). They allow interoperation of hardware and software from different sources which allows internets to function.[1] As the Internet became global, Internet Standards became the lingua franca of worldwide communications.[2]

https://en.m.wikipedia.org/wiki/Open_standard

Computer hardware and software standards are technical standards instituted for compatibility and interoperability between software, systems, platforms and devices.

https://en.m.wikipedia.org/wiki/Technical_standard

A technical standard is an established norm or requirement for a repeatable technical task which is applied to a common and repeated use of rules, conditions, guidelines or characteristics for products or related processes and production methods, and related management systems practices. A technical standard includes definition of terms; classification of components; delineation of procedures; specification of dimensions, materials, performance, designs, or operations; measurement of quality and quantity in describing materials, processes, products, systems, services, or practices; test methods and sampling procedures; or descriptions of fit and measurements of size or strength.[1]

https://en.m.wikipedia.org/wiki/De_facto_standard

A de facto standard is a custom or convention that is commonly used even though its use is not required.

De facto is a Latin phrase (literally "of fact"), here meaning "in practice but not necessarily ordained by law" or "in practice or actuality, but not officially established".

- PCI Express electrical and mechanical interface, and interconnect protocol used in computers, servers, and industrial applications.

- HDMI, DisplayPort, and VGA for video; RS-232 for low-bandwidth serial communication.

- USB for high speed serial interface in computers and for powering or charging low power external devices (like mobile phones, headphones, portable hard drives) usually using micro USB plug and socket.

- 3.5 inch and 2.5 inch hard drives.

- ATX motherboard, back plane, and power standards

https://en.m.wikipedia.org/wiki/Harmonization_(standards)

Harmonization is the process of minimizing redundant or conflicting standards which may have evolved independently.[1][2] The name is also an analogy to the process of harmonizing discordant music.

Harmonization is different from standardization. Harmonization involves a reduction in variation of standards, while standardization entails moving towards the eradication of any variation with the adoption of a single standard.[3] The goal for standard harmonization is to find commonalities, identify critical requirements that need to be retained, and provide a common framework for standards setting organizations (SSO) to adopt. In some instances, businesses come together to form alliances or coalitions,[4] also referred to as multi-stakeholder initiatives (MSI), in the belief that harmonization could reduce compliance costs and simplify the process of meeting requirements. It also has the potential to reduce complexity for those tasked with testing and auditing standards for compliance.

Private standards typically require a financial contribution in the form of an annual fee from the organizations that adopt the standard. Corporations are encouraged to join the board of governance of the standard owner,[24] which enables reciprocity: corporations have permission to exert influence over the requirements in the standard, and in return the same corporations promote the standard in their supply chains, which generates revenue and profit for the standard owner. Financial incentives with private standards can result in a perverse incentive, where some private standards are created solely with the intent of generating money. BRCGS, as scheme owner of private standards, was acquired in 2016 by LGC Ltd, which was owned by private equity company Kohlberg Kravis Roberts.[25] This acquisition triggered substantial increases in BRCGS annual fees.[26] In 2019, LGC Ltd was sold to private equity companies Cinven and Astorg.[27]

https://en.m.wikipedia.org/wiki/Software_standard

A software standard is a standard, protocol, or other common format of a document, file, or data transfer accepted and used by one or more software developers while working on one or more computer programs. Software standards enable interoperability between different programs created by different developers.

Representatives from standards organizations, like W3C[4] and ISOC,[5] collaborate on how to make a unified software standard to ensure seamless communication between software applications. These organisations consist of groups of larger software companies like Microsoft and Apple Inc.

The complexity of a standard varies based on the specific problem it aims to address but it needs to remain simple, maintainable and understandable. The standard document must comprehensively outline various conditions, types, and elements to ensure practicality and fulfill its intended purpose. For instance, although both FTP (File Transfer Protocol) and SMTP (Simple Mail Transfer Protocol) facilitate computer-to-computer communication, FTP specifically handles the exchange of files, while SMTP focuses on the transmission of emails.

A standard can be a closed standard or an open standard. The documentation for an open standard is open to the public, and anyone can create software that implements and uses the standard. The documentation and specification for a closed standard are not available to the public, enabling its developer to sell and license the code to manage their data format to other interested software developers. While this process increases the revenue potential for a useful file format, it may limit acceptance and drive the adoption of a similar, open standard instead.[6]

Backwards compatibility

In telecommunications and computing, backward compatibility (or backwards compatibility) is a property of an operating system, software, real-world product, or technology that allows for interoperability with an older legacy system, or with input designed for such a system.
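As a minimal sketch of the idea, assume a hypothetical JSON user record whose newer layout (version 2) split a single name field into two. The field names and the `parse_user` helper are illustrative assumptions, not part of any real standard. A reader stays backward compatible by continuing to accept the older layout:

```python
import json


def parse_user(raw: str) -> dict:
    """Read a hypothetical user record in either the old (v1) or new (v2) layout."""
    record = json.loads(raw)
    # Assumption: v1 records were written before a "version" field existed.
    version = record.get("version", 1)
    if version == 1:
        # Legacy layout: one "name" field holding "First Last".
        first, _, last = record["name"].partition(" ")
        return {"first": first, "last": last}
    if version == 2:
        # Current layout: separate "first" and "last" fields.
        return {"first": record["first"], "last": record["last"]}
    raise ValueError(f"unsupported version: {version}")


if __name__ == "__main__":
    print(parse_user('{"name": "Ada Lovelace"}'))
    print(parse_user('{"version": 2, "first": "Ada", "last": "Lovelace"}'))
```

Both calls return the same dictionary, so software written against the newer layout can still consume input produced by the legacy system.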

Specifications

https://en.m.wikipedia.org/wiki/Open_specifications

An open specification is a specification created and controlled, in an open process, by an association or a standardization body intending to achieve interoperability and interchangeability. An open specification is not controlled by a single company or individual or by a group with discriminatory membership criteria. Copies of Open Specifications are available free of charge or for a moderate fee and can be implemented under reasonable and non-discriminatory licensing (RAND) terms by all interested parties.

A specification often refers to a set of documented requirements to be satisfied by a material, design, product, or service.[1] A specification is often a type of technical standard.

There are different types of technical or engineering specifications (specs), and the term is used differently in different technical contexts. They often refer to particular documents, and/or particular information within them. The word specification is broadly defined as "to state explicitly or in detail" or "to be specific".

A requirement specification is a documented requirement, or set of documented requirements, to be satisfied by a given material, design, product, service, etc.[1] It is a common early part of engineering design and product development processes in many fields.

A design or product specification describes the features of the solutions for the Requirement Specification, referring to either a designed solution or final produced solution. It is often used to guide fabrication/production. Sometimes the term specification is here used in connection with a data sheet (or spec sheet), which may be confusing. A data sheet describes the technical characteristics of an item or product, often published by a manufacturer to help people choose or use the products. A data sheet is not a technical specification in the sense of informing how to produce.

Specifications are a type of technical standard that may be developed by any of various kinds of organizations, in both the public and private sectors. Example organization types include a corporation, a consortium (a small group of corporations), a trade association (an industry-wide group of corporations), a national government (including its different public entities, regulatory agencies, and national laboratories and institutes), a professional association (society), a purpose-made standards organization such as ISO, or vendor-neutral developed generic requirements. It is common for one organization to refer to (reference, call out, cite) the standards of another. Voluntary standards may become mandatory if adopted by a government or business contract.

https://en.m.wikipedia.org/wiki/Single_UNIX_Specification

The Single UNIX Specification (SUS) is a standard for computer operating systems,[1][2] compliance with which is required to qualify for using the "UNIX" trademark. The standard specifies programming interfaces for the C language, a command-line shell, and user commands. The core specifications of the SUS known as Base Specifications are developed and maintained by the Austin Group, which is a joint working group of IEEE, ISO/IEC JTC 1/SC 22/WG 15 and The Open Group. If an operating system is submitted to The Open Group for certification and passes conformance tests, then it is deemed to be compliant with a UNIX standard such as UNIX 98 or UNIX 03.

Very few BSD and Linux-based operating systems are submitted for compliance with the Single UNIX Specification, although system developers generally aim for compliance with POSIX standards, which form the core of the Single UNIX Specification.

Specification need

In many contexts, particularly software, specifications are needed to avoid errors due to lack of compatibility, for instance, in interoperability issues.

For instance, when two applications share Unicode data, but use different normal forms or use them incorrectly, in an incompatible way or without sharing a minimum set of interoperability specification, errors and data loss can result. For example, Mac OS X has many components that prefer or require only decomposed characters (thus decomposed-only Unicode encoded with UTF-8 is also known as "UTF8-MAC"). In one specific instance, the combination of OS X errors handling composed characters, and the samba file- and printer-sharing software (which replaces decomposed letters with composed ones when copying file names), has led to confusing and data-destroying interoperability problems.[33][34]
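The mismatch between composed and decomposed forms can be reproduced with Python's standard `unicodedata` module. This is only a sketch: the `same_name` helper and the choice of NFC as the agreed form are assumptions made for illustration, not what macOS or Samba actually do internally.

```python
import unicodedata

# The same visible text, "café", in two Unicode normal forms.
composed = unicodedata.normalize("NFC", "caf\u00e9")    # é as one code point
decomposed = unicodedata.normalize("NFD", "caf\u00e9")  # e + combining acute accent


def same_name(a: str, b: str) -> bool:
    """Compare two names after agreeing on a single normal form (NFC here)."""
    return unicodedata.normalize("NFC", a) == unicodedata.normalize("NFC", b)


if __name__ == "__main__":
    print(composed == decomposed)           # False: the code points differ
    print(len(composed), len(decomposed))   # 4 5
    print(same_name(composed, decomposed))  # True once both sides normalize
```

Two programs that each skip the normalization step will treat these as different file names, which is the kind of interoperability failure a shared specification is meant to prevent.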

Protocols

https://en.m.wikipedia.org/wiki/Communication_protocol

A communication protocol is a system of rules that allows two or more entities of a communications system to transmit information via any variation of a physical quantity. The protocol defines the rules, syntax, semantics, and synchronization of communication and possible error recovery methods. Protocols may be implemented by hardware, software, or a combination of both.[1]

Communicating systems use well-defined formats for exchanging various messages. Each message has an exact meaning intended to elicit a response from a range of possible responses predetermined for that particular situation. The specified behavior is typically independent of how it is to be implemented. Communication protocols have to be agreed upon by the parties involved.[2] To reach an agreement, a protocol may be developed into a technical standard. A programming language describes the same for computations, so there is a close analogy between protocols and programming languages: protocols are to communication what programming languages are to computations.[3] An alternate formulation states that protocols are to communication what algorithms are to computation.[4]

Multiple protocols often describe different aspects of a single communication. A group of protocols designed to work together is known as a protocol suite; when implemented in software they are a protocol stack.
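A rough sketch of how a protocol stack layers its work: each layer wraps the data handed down from the layer above with its own header, and the receiver removes the headers in the opposite order. The layer names and the `|` separator here are hypothetical placeholders, not the real TCP/IP header formats.

```python
# Hypothetical layer names, listed from the top of the stack to the bottom.
LAYERS = ["APP", "TRANSPORT", "NETWORK"]


def send(payload: bytes) -> bytes:
    """Wrap the payload with one header per layer, top of the stack first."""
    for layer in LAYERS:
        payload = layer.encode() + b"|" + payload
    return payload


def receive(packet: bytes) -> bytes:
    """Strip the headers in the opposite order, bottom of the stack first."""
    for layer in reversed(LAYERS):
        header, sep, packet = packet.partition(b"|")
        if not sep or header != layer.encode():
            raise ValueError(f"expected {layer} header, got {header!r}")
    return packet


if __name__ == "__main__":
    wire = send(b"hello")
    print(wire)           # b'NETWORK|TRANSPORT|APP|hello'
    print(receive(wire))  # b'hello'
```

Because each layer only reads its own header, one layer can be replaced without changing the others, provided both ends agree on the same suite.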

Internet communication protocols are published by the Internet Engineering Task Force (IETF). The IEEE (Institute of Electrical and Electronics Engineers) handles wired and wireless networking and the International Organization for Standardization (ISO) handles other types. The ITU-T handles telecommunications protocols and formats for the public switched telephone network (PSTN). As the PSTN and Internet converge, the standards are also being driven towards convergence.

A protocol is a set of rules that determines how data is sent and received over a network. The protocol is just like a language that computers use to talk to each other, ensuring they understand and can respond to each other's messages correctly. Protocols help make sure that data moves smoothly and securely between devices on a network.

To make communication successful between devices, some rules and procedures should be agreed upon at the sending and receiving ends of the system. Such rules and procedures are called Protocols. Different types of protocols are used for different types of communication.

- Syntax: Syntax refers to the structure or format of the data exchanged between devices. It covers the type of data, the composition of the message, and the sequencing of its parts. For example, a protocol might define the first 8 bits as the address of the sender, the next 8 bits as the address of the receiver, and the remaining bits as the message itself.

- Semantics: Semantics defines the meaning of the data transmitted between devices. It provides the rules and norms for interpreting the values in a message and the actions to take in response.

- Timing: Timing refers to the synchronization and coordination between devices while transferring data. It determines when data should be sent and how fast it can be sent. For example, if a sender transmits at 100 Mbps but the receiver can only handle 1 Mbps, the receiver will overflow and lose data. Proper timing prevents data loss, collisions, and other timing-related issues.

- Sequence Control: Sequence control ensures the proper ordering of data packets. Its main responsibilities are to acknowledge data as it is received and to retransmit lost data. Through this mechanism, the data is delivered in the correct order.

- Flow Control: Flow control regulates the rate at which data is delivered between devices. It limits how much the sender transmits or asks the receiver whether it is ready for more. Flow control prevents data congestion and loss.

- Error Control: Error control mechanisms detect and fix data transmission faults. They include error detection codes, retransmission of data, and error recovery. Error control detects and corrects the effects of noise, interference, and other problems to maintain data integrity.

- Security: Network security protects data confidentiality, integrity, and authenticity through encryption, authentication, access control, and other security procedures. Security standards protect the privacy and trustworthiness of network communication.
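The syntax and error control elements above can be sketched together in a toy frame format. The layout follows the example in the Syntax bullet (8 bits of sender address, 8 bits of receiver address, then the message); the trailing one-byte checksum and the function names are assumptions added here to illustrate error detection, not part of any real protocol.

```python
def checksum(data: bytes) -> int:
    """Hypothetical error-detection code: the sum of all bytes modulo 256."""
    return sum(data) % 256


def build_frame(sender: int, receiver: int, message: bytes) -> bytes:
    """Syntax: 1 byte sender, 1 byte receiver, the message, then 1 checksum byte."""
    if not (0 <= sender <= 255 and 0 <= receiver <= 255):
        raise ValueError("addresses must fit in 8 bits")
    body = bytes([sender, receiver]) + message
    return body + bytes([checksum(body)])


def parse_frame(frame: bytes) -> tuple[int, int, bytes]:
    """Split a frame back into (sender, receiver, message), rejecting damaged frames."""
    if len(frame) < 3:
        raise ValueError("frame too short")
    body, received = frame[:-1], frame[-1]
    if checksum(body) != received:
        raise ValueError("checksum mismatch: frame was corrupted in transit")
    return body[0], body[1], body[2:]


if __name__ == "__main__":
    frame = build_frame(1, 2, b"hi")
    print(parse_frame(frame))  # (1, 2, b'hi')
```

Both ends must share the same layout and the same checksum rule for this to work, which is exactly what a protocol specification pins down. A receiver that detects a mismatch would typically ask for retransmission, which is where sequence control takes over.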

Interface

https://en.m.wikipedia.org/wiki/Interface_(computing)

In computing, an interface (American English) or interphase (British English, archaic) is a shared boundary across which two or more separate components of a computer system exchange information. The exchange can be between software, computer hardware, peripheral devices, humans, and combinations of these.[1] Some computer hardware devices, such as a touchscreen, can both send and receive data through the interface, while others such as a mouse or microphone may only provide an interface to send data to a given system.[2]

https://en.m.wikipedia.org/wiki/Hardware_interface

Hardware interfaces exist in many components, such as the various buses, storage devices, other I/O devices, etc. A hardware interface is described by the mechanical, electrical, and logical signals at the interface and the protocol for sequencing them (sometimes called signaling).[3] A standard interface, such as SCSI, decouples the design and introduction of computing hardware, such as I/O devices, from the design and introduction of other components of a computing system, thereby allowing users and manufacturers great flexibility in the implementation of computing systems.[3] Hardware interfaces can be parallel with several electrical connections carrying parts of the data simultaneously or serial where data are sent one bit at a time.[4]
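The difference between serial and parallel transfer can be modeled in a few lines. This is only an illustrative software model, assuming an 8-wire parallel bus and most-significant-bit-first ordering; real interfaces also define voltages, clocks, and signaling that are not shown.

```python
def to_bits(byte: int) -> list[int]:
    """Return the 8 bits of a byte, most significant bit first."""
    return [(byte >> shift) & 1 for shift in range(7, -1, -1)]


def serial_transfer(data: bytes) -> list[int]:
    """One wire: a single bit is sent per clock tick."""
    return [bit for byte in data for bit in to_bits(byte)]


def parallel_transfer(data: bytes) -> list[list[int]]:
    """Eight wires (assumed): a whole byte is sent per clock tick."""
    return [to_bits(byte) for byte in data]


if __name__ == "__main__":
    data = b"AB"
    print(len(serial_transfer(data)))    # 16 ticks on one wire
    print(len(parallel_transfer(data)))  # 2 ticks on eight wires
```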

https://en.m.wikipedia.org/wiki/Application_binary_interface

https://en.m.wikipedia.org/wiki/Application_programming_interface

A software interface may refer to a wide range of different types of interfaces at different "levels". For example, an operating system may interface with pieces of hardware. Applications or programs running on the operating system may need to interact via data streams, filters, and pipelines.[5] In object oriented programs, objects within an application may need to interact via methods.[6]

In practice

A key principle of design is to prohibit access to all resources by default, allowing access only through well-defined entry points, i.e., interfaces.[7] Software interfaces provide access to computer resources (such as memory, CPU, storage, etc.) of the underlying computer system; direct access (i.e., not through well-designed interfaces) to such resources by software can have major ramifications, sometimes disastrous ones, for functionality and stability.
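A small sketch of that principle, using a hypothetical `Storage` class: the internal list of blocks is treated as off limits, and callers reach it only through `read` and `write`, which validate every request. Python marks internals with a leading underscore by convention rather than enforcing it, so this illustrates the design rule, not a security boundary.

```python
class Storage:
    """Hypothetical resource that callers reach only through read() and write()."""

    def __init__(self, capacity: int) -> None:
        # Internal state: not part of the interface.
        self._blocks = [b""] * capacity

    def write(self, index: int, data: bytes) -> None:
        """Entry point: store data in a block after validating the request."""
        self._check(index)
        self._blocks[index] = data

    def read(self, index: int) -> bytes:
        """Entry point: return the contents of a block after validating the request."""
        self._check(index)
        return self._blocks[index]

    def _check(self, index: int) -> None:
        # Refuse anything outside the storage instead of touching memory blindly.
        if not 0 <= index < len(self._blocks):
            raise IndexError(f"block {index} is outside the storage")


if __name__ == "__main__":
    disk = Storage(capacity=2)
    disk.write(1, b"data")
    print(disk.read(1))  # b'data'
```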

The Difference

In essence, a standard is a guideline for achieving uniformity, efficiency, and quality in products, processes, or services. A protocol is a set of rules that govern how data is transmitted and received, facilitating communication between systems. A specification details the requirements, dimensions, and materials for a specific product or process.

Protocols define how data is sent, received, and processed, while standards ensure that various technologies are compatible with each other. This coordination is critical for the Internet and other networks to function consistently and efficiently.

Standard:

- Purpose: Standards ensure consistency and interoperability, often aiming for quality, safety, and efficiency.

- Scope: Standards can cover a wide range of areas, from product dimensions and performance to processes and safety procedures.

- Example: The IEEE 802.3 (Ethernet) standard defines the physical and data link layers for wired networks.

- Key Characteristics: Standards are often formalized and widely accepted, sometimes mandated by legal requirements or industry best practices.

Protocol:

- Purpose: Protocols are the rules that govern how information is exchanged, ensuring that different systems can communicate effectively.

- Scope: Protocols primarily deal with communication, covering aspects like data formatting, error checking, and message sequencing.

- Example: TCP/IP (Transmission Control Protocol/Internet Protocol) is a fundamental suite of protocols that governs how data is transmitted over the Internet.

- Key Characteristics: Protocols are often implemented in software and hardware and are essential for network communication.

Specification:

- Purpose: Specifications detail the requirements and characteristics of a product, service, or process.

- Scope: Specifications can be very detailed, outlining everything from dimensions and materials to performance criteria and testing procedures.

- Example: A technical specification for a computer component might include its dimensions, power requirements, and operating temperature range.

- Key Characteristics: Specifications are often used in contracts and are crucial for ensuring that products and services meet the required standards.

Relationship:

- Standards often include specifications. For example, a standard for a brick might include specifications for its dimensions and materials.

- Protocols are often defined by specifications. For instance, a communication protocol standard might have a specification document outlining the rules and formats for message exchange.

- Standards can also influence the development of protocols and specifications.

In essence, standards provide the overall framework, specifications detail the requirements within that framework, and protocols define the rules for communication within that framework.