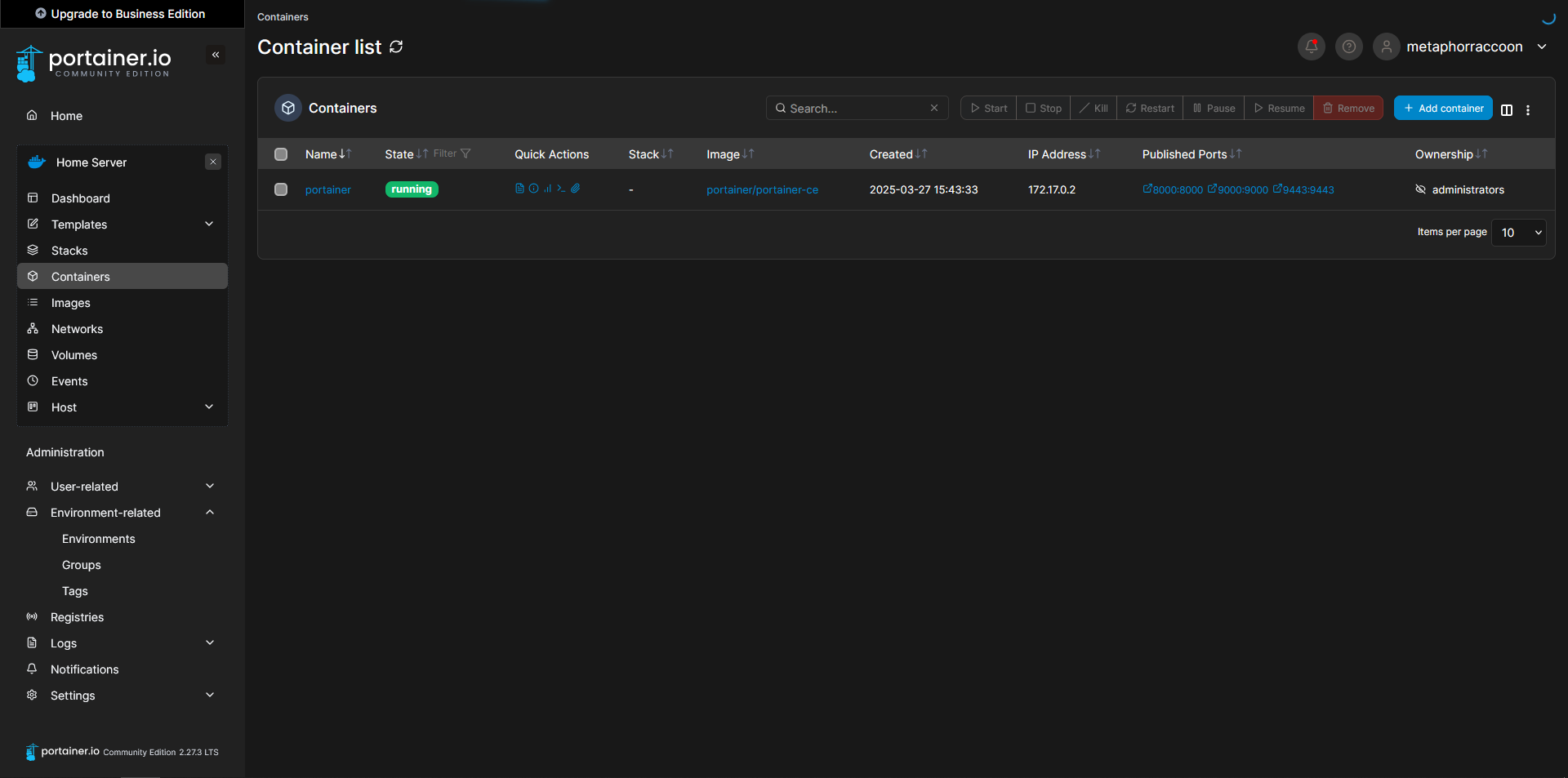

What is Docker?

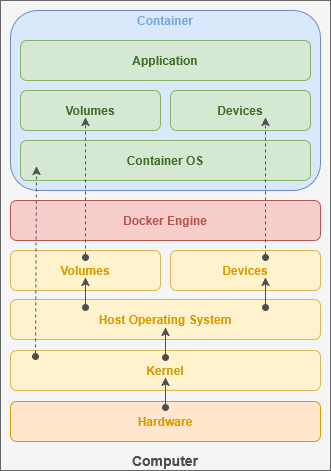

This software service runs on top of the operating system to create "virtual containers", each with its own minimal operating system environment that shares the host's Linux kernel. While we can open ports into a container to exchange data, it otherwise runs isolated from the host system.

This is similar to the technology companies use to host their web-based "cloud" services. Containers let us quickly deploy software in secure, isolated environments. When you need to handle more traffic, Docker makes it simple to set up multiple servers running the same services and to balance the user load between these independent systems.

Docker Engine

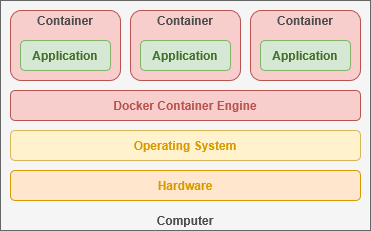

Docker interfaces directly with the Linux kernel, which provides the drivers that communicate with your computer's hardware. This lets a container be deployed on another server regardless of the underlying hardware, as long as that server uses a compatible CPU architecture.

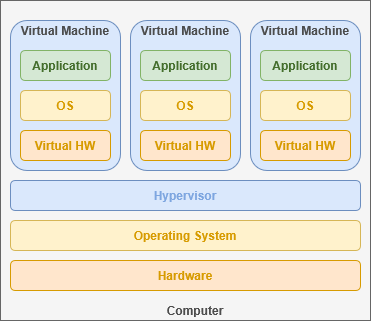

The mechanism containers use is fundamentally different from a virtual machine, but the two perform a similar function. Virtual machines use a "hypervisor" to emulate the hardware needed to run their own "guest" operating system. This happens under the supervision of your "host" operating system and incurs a great deal of computational overhead.

Containers share their host operating system's kernel and use the existing hardware directly. As a result, a container only needs to include the minimal operating system files required to support its software.

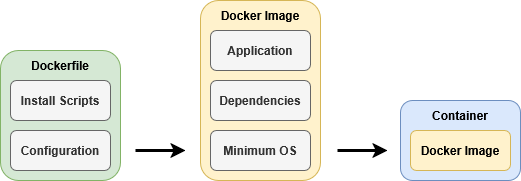

Developers build a 'container image' that contains the operating system files and libraries the application requires. Alpine Linux is the foundation of many Docker images and requires only about 5 MB of storage space. An image acts as a template for quickly creating a containerized operating system that interfaces with your hardware through your host operating system. Because Docker has access to the kernel, it can grant individual containers access to specific devices and files.

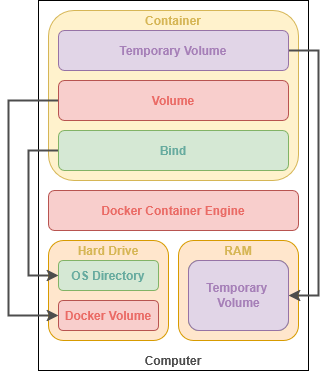

Docker images are "read-only": the files of an image cannot be changed. Any changes made inside a container are stored in a temporary writable layer that is lost when the container is removed and recreated from its image. This makes it extremely easy to update services that have been optimized for Docker: download the latest image and recreate the container from it.
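As a minimal sketch of that update workflow, written in the Docker Compose format covered in the Docker Compose section of this guide: setting the Compose `pull_policy` option to `always` tells Compose to check the registry for a newer image every time the service is started with `docker compose up -d`, and to recreate the container if the `latest` tag now points to a different image. Running `docker compose pull` followed by `docker compose up -d` should achieve the same result manually. The image and paths are the nginx example used throughout this guide.

```yaml
services:
  nginx:
    image: lscr.io/linuxserver/nginx:latest   # the "latest" tag moves as new releases are published
    container_name: nginx
    pull_policy: always                       # check the registry for a newer image on every `up`
    volumes:
      - /srv/nginx/:/config                   # settings live on the host, so they survive the container being replaced
```

Because only the image is replaced, anything stored inside the container itself is discarded; the settings survive only because `/config` is mapped to a folder on the host.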

To keep data when a container is recreated, we need to designate storage for it. Docker can: create volumes, virtual disks managed by Docker that exist independently of any container and can be deleted once they are no longer used; mount a directory from our host computer inside the container (a "bind mount"); or create a temporary filesystem in memory (a "tmpfs" mount) that is deleted when the container stops.
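A minimal sketch of all three storage options side by side, in the Docker Compose format covered in the Docker Compose section of this guide. The service name `app`, the volume name `app-data` and the paths are hypothetical; the small `alpine` image is only a placeholder, kept running so the mounts can be inspected.

```yaml
services:
  app:
    image: alpine:latest                    # placeholder image for illustration
    command: ["tail", "-f", "/dev/null"]    # keep the container running
    volumes:
      - app-data:/data                      # named volume: managed by Docker, outlives the container
      - /srv/app/config:/config             # bind mount: a directory from the host computer
    tmpfs:
      - /tmp/cache                          # in-memory filesystem, discarded when the container stops

volumes:
  app-data: {}                              # declares the named volume used above
```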

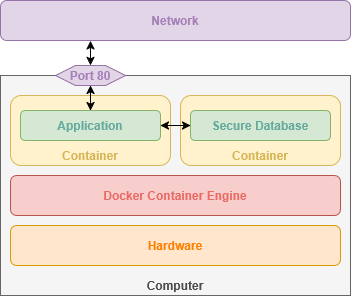

When creating a container, we can publish network ports that allow communication with the service it hosts. For many self-hosted cloud services, this includes a port for a browser-based graphical user interface. Containers connected to the same Docker network can also reach each other's ports directly, for example an application and its database.

This can increase security by letting your services communicate behind the scenes, out of reach from outside your computer. Some services, like qBittorrent, also use ports to communicate with the outside internet through your network router.
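Port publishing can also be limited to the host itself. A minimal sketch in the Docker Compose format covered in the Docker Compose section of this guide, assuming the same nginx image used throughout (which listens on ports 80 and 443): a mapping prefixed with `127.0.0.1:` binds only to the host's loopback address, so that port answers requests from the computer running Docker but not from the rest of the network.

```yaml
services:
  nginx:
    image: lscr.io/linuxserver/nginx:latest
    container_name: nginx
    ports:
      - "80:80"               # reachable from other computers on your network
      - "127.0.0.1:443:443"   # reachable only from this computer (loopback address)
```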

Containers can be controlled like a system service, allowing us to easily start, stop or restart them. Updating software is easy because everything used by the application is stored within the container image.
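From the terminal, a container named `nginx` can be managed with `sudo docker start nginx`, `sudo docker stop nginx` and `sudo docker restart nginx`. A restart policy goes one step further and lets Docker bring a container back on its own, much like a system service. A minimal sketch in the Docker Compose format covered in the Docker Compose section of this guide, reusing this guide's nginx example:

```yaml
services:
  nginx:
    image: lscr.io/linuxserver/nginx:latest
    container_name: nginx
    restart: unless-stopped   # restart after a crash or reboot, unless you stopped it yourself
```

Other accepted values are `"no"` (the default), `always` and `on-failure`.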

Docker containers are controlled primarily through the terminal. You can create and start a Docker container with a single command:

```shell
sudo docker run -it -d -p 80:80 --name nginx -v /srv/nginx/:/config lscr.io/linuxserver/nginx:latest
```

This command has several important parameters that define how the container is created and how it then behaves. It follows the basic syntax:

```
sudo [program] [command] [parameters]
```

Prefixing a command with `sudo` ("superuser do") runs it with root privileges. Here we are executing the `docker` program's `run` command to create a container with the following parameters:

| Parameter | Description |
| --- | --- |
| `-it` | Runs the container with an interactive terminal (`-i` keeps input open, `-t` allocates a terminal), so its shell stays accessible through the terminal |
| `-d` | Runs the container in the background (detached) |
| `-p 80:80` | Publishes a port, connecting a port inside the container to a port on our host computer (`host:container`). This makes the service accessible to other computers on your network. |
| `--name nginx` | Name to use for the container |
| `-v /srv/nginx/:/config` | Links a directory or file on our host computer into the container (`host:container`) so the container can access it |
| `lscr.io/linuxserver/nginx:latest` | The Docker image used to create the container |

This is the basic syntax for creating any Docker container. We can check the status of running Docker containers by entering the command:

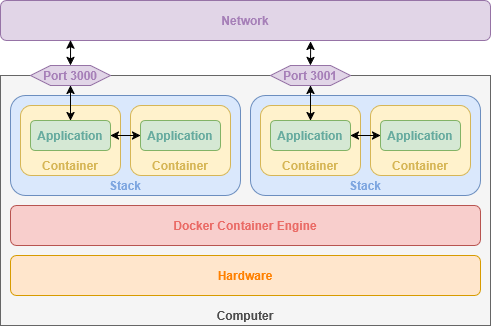

```shell
sudo docker ps
```

Docker Compose

Docker Compose is a Docker Engine add-on that lets you define and create containers, as well as the virtual networks connecting them. This makes it very easy to quickly spin up containers from a file written in YAML, an easy-to-read configuration language. Docker Compose can also improve application security by automatically creating a private network for each application, where its services can securely reach each other behind the scenes.

```yaml
services:
  nginx:
    image: lscr.io/linuxserver/nginx:latest
    container_name: nginx
    stdin_open: true   # equivalent of -i
    tty: true          # equivalent of -t
    volumes:
      - /srv/nginx/:/config
    ports:
      - "80:80"
```

This Docker Compose snippet creates the same container as our `docker run` example above when it is started in the background with `sudo docker compose up -d`. YAML follows a well-defined syntax that focuses on human readability. Portainer, a web application we will be installing, can use Docker Compose to quickly host services through our browser and a graphical interface.
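Expanding that snippet into a small stack shows how Compose can keep a database off the wider network. A minimal sketch: `example/app` is a hypothetical application image, and PostgreSQL, the network names `frontend` and `backend`, the volume name and the password are assumptions for illustration. Marking `backend` as `internal` means a container attached only to it, such as `db`, has no route to the internet and publishes nothing on the host, while `app` can still reach the database by its service name at `db:5432`.

```yaml
services:
  app:
    image: example/app:latest        # hypothetical application image
    ports:
      - "8080:80"                    # the only port published on the host
    networks:
      - frontend
      - backend
  db:
    image: postgres:16
    environment:
      POSTGRES_PASSWORD: change-me   # placeholder; use a real secret
    volumes:
      - db-data:/var/lib/postgresql/data   # keep database files when the container is recreated
    networks:
      - backend                      # reachable only by services on "backend"

networks:
  frontend: {}                       # ordinary bridge network with outside access
  backend:
    internal: true                   # no route to the outside world

volumes:
  db-data: {}
```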