What is Linux?

When you purchase a MacBook, it comes with macOS pre-installed. This operating system is where you browse the Internet, message friends and run your favorite applications. This closely guarded software uses proprietary code created by Apple, which releases a new version each year that supersedes the last. This cycle focuses on using software to leverage new features in updated hardware.

When talking about "Linux", we are not explicitly referring to any one operating system – like macOS or Windows. Linux is the collective effort of countless development communities from around the globe that work together and release their source code for everyone to see. These projects create modular components that combine into larger systems.

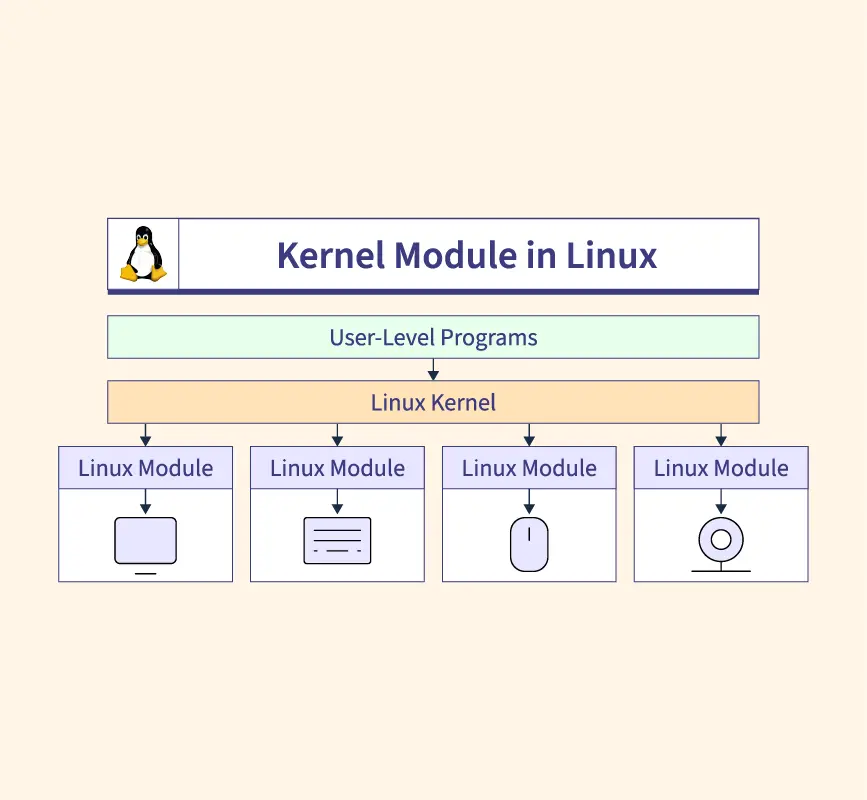

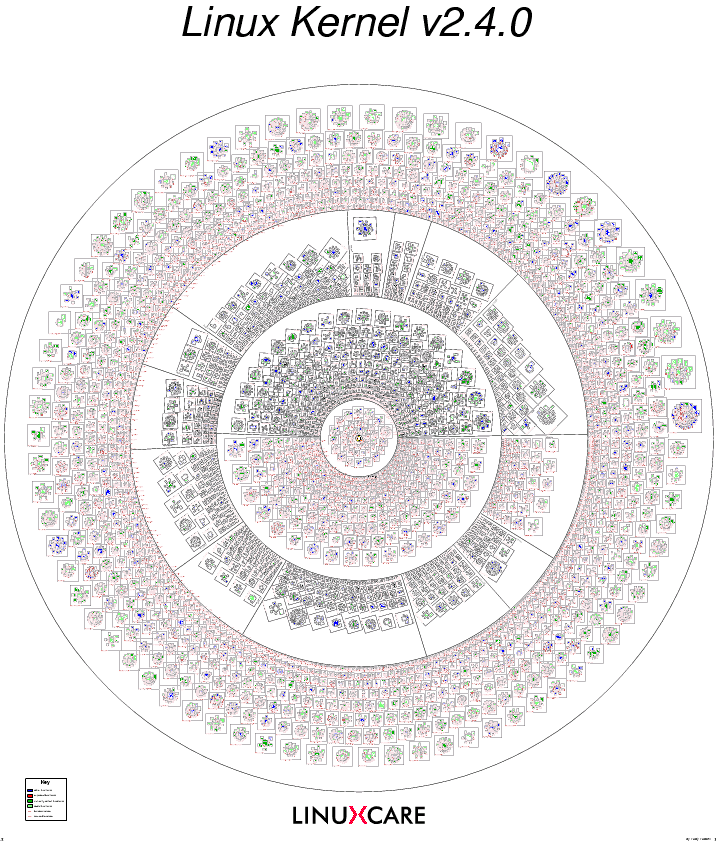

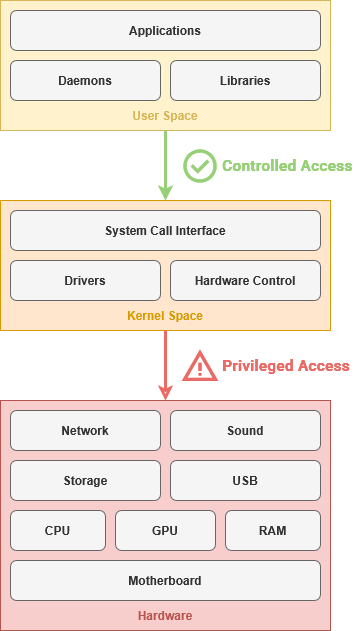

These systems revolve around the Linux kernel – a core piece of software that has complete control over all hardware and software within a computer. At 40-million lines of code, this monolithic software project has contributions from over 13,000 developers and 1,300 companies around the globe.

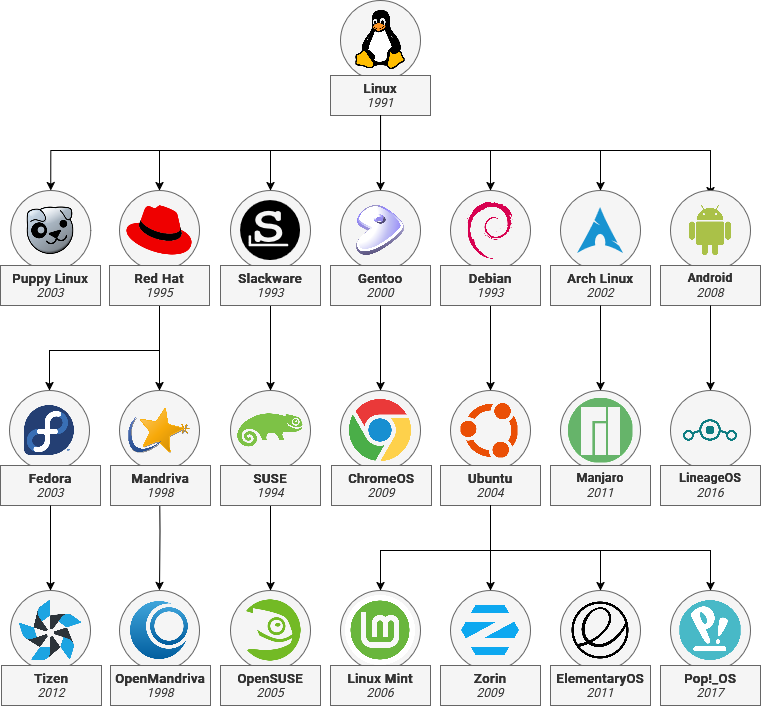

The kernel is packaged alongside software created by other open-source developers into a 'distro' – or distribution. Entire Linux branches can derive from other distros by mixing and matching components, creating a family tree. Debian is the root of both Ubuntu and Raspberry Pi OS, and Ubuntu is used in turn for elementary OS, Linux Mint and many others.

Much of our modern world is powered by open-source software projects. Linux is an example of the immense scale of these projects, which power the majority of cloud and Internet servers. OpenSSL, an open-source project that provides encryption to over two-thirds of the Internet, illustrates their critical importance.

Components

The Linux operating system comes with several core components. These parts work together to initialize the hardware so that software can be loaded onto it and made available to users. For each component, there are often multiple software options that achieve the same outcome, so you can choose among them.

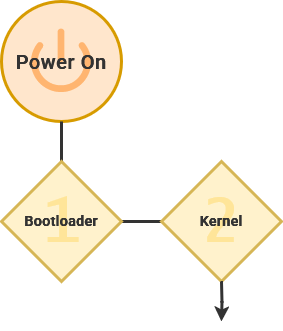

Bootstrapping

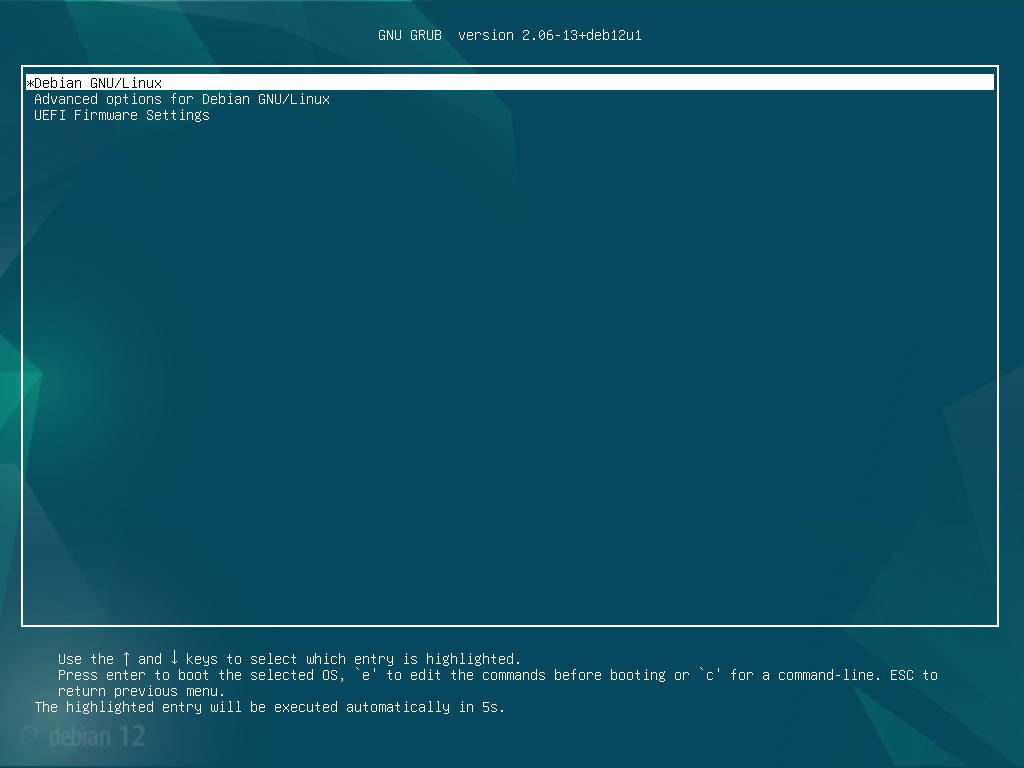

When a computer is first turned on, the bootloader loads the kernel into active memory and starts the first stages of the operating system. A bootloader can also let you choose between multiple operating systems installed on the same hardware. GRUB (the GNU GRand Unified Bootloader) is the most common bootloader for Linux operating systems.

Core Systems

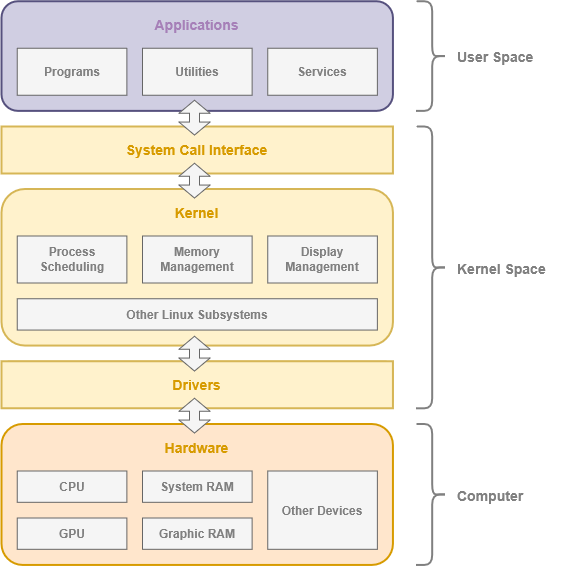

The kernel is always loaded into memory, facilitating communication between components like your processor, graphics card, and storage drives. The kernel uses device drivers installed on the operating system to control hardware inside the computer.

A kernel is the most essential and core part of something greater.

Visualization of Open-Source Projects in the Linux Kernel

While the Linux kernel is perhaps the most well-known open-source kernel, it is far from the only option. Notable examples include FreeBSD, OpenBSD and NetBSD, as well as specialized kernels like GNU Hurd and Mach. Microsoft Windows uses its own proprietary "closed-source" kernel, while macOS is built on Apple's XNU kernel.

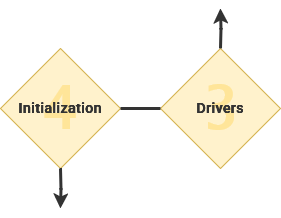

Drivers

The kernel uses modular software known as drivers to communicate with and control hardware components. There is a wide range of drivers available, from proprietary drivers to open-source drivers that provide basic features for a diverse range of devices.

Initialize Subsystems

Once GRUB has loaded the kernel, the first program started by the operating system is called an init – or initialization – service. This integral program is the root of all other processes operating on the computer. This is the first program that operates within the 'user space' – or software that exists outside of the kernel.
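To see which init program a running system uses, one option is to look up the name of process number 1. The sketch below assumes a Linux system that exposes the "/proc" virtual filesystem; the function name `init_process_name` is made up for illustration and returns `None` wherever that file cannot be read.

```python
from pathlib import Path
from typing import Optional


def init_process_name(proc_root: str = "/proc") -> Optional[str]:
    """Return the name of the process with PID 1, or None if it cannot be read."""
    comm_file = Path(proc_root) / "1" / "comm"
    try:
        return comm_file.read_text().strip()
    except OSError:
        # Not a Linux system, or /proc is not mounted.
        return None


if __name__ == "__main__":
    print(init_process_name())
```

On many current distributions this is likely to print `systemd`, but other init programs will report their own names.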

For many Linux distributions, systemd has become standard. This software is responsible for managing all of the software that runs behind-the-scenes in an operating system. A crucial aspect is making sure that everything starts in the right order – such as only starting network-dependent services after an internet connection is established.
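The idea of starting everything in the right order can be illustrated with a dependency graph. This is only a sketch of the general technique (a topological sort), not how systemd itself is implemented, and the service names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical services, each mapped to the services it must start after.
DEPENDENCIES = {
    "network": set(),
    "logging": set(),
    "sshd": {"network", "logging"},
    "web-server": {"network"},
}


def start_order(dependencies):
    """Return one valid order in which every service follows its dependencies."""
    return list(TopologicalSorter(dependencies).static_order())


order = start_order(DEPENDENCIES)
print(order)
```

More than one order can be valid, so the exact output may vary; what matters is that "network" always appears before "sshd" and "web-server". A circular dependency raises `graphlib.CycleError` instead of producing an order.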

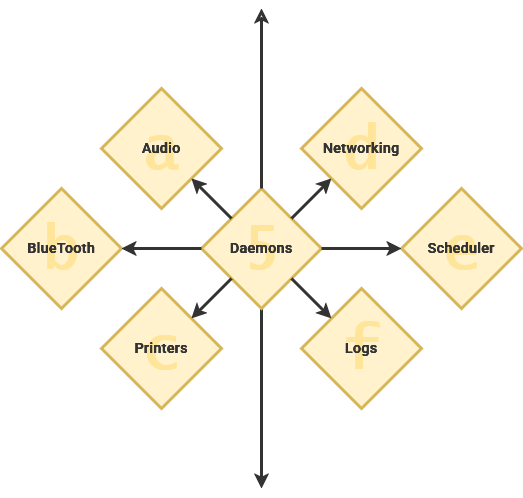

Daemons

Once the init program – often systemd – has loaded itself, it can start the other necessary processes. A daemon is a specific type of software program that runs in the background. These control system functions and are not something that you often need to interact with directly. Generally, these programs start during the boot process and continue running until the computer is shut down.

Many people equate the word "daemon" with the word "demon", implying some kind of satanic connection between UNIX and the underworld. This is an egregious misunderstanding. "Daemon" is actually a much older form of "demon"; daemons have no particular bias towards good or evil, but rather serve to help define a person's character or personality. The ancient Greeks' concept of a "personal daemon" was similar to the modern concept of a "guardian angel"—eudaemonia is the state of being helped or protected by a kindly spirit. As a rule, UNIX systems seem to be infested with both daemons and demons.

— Unix System Administration Handbook

These daemon services fill a range of roles and niche operations that perform tasks behind-the-scenes. Traditionally, their names end with a 'd' or otherwise denote that they are daemons.

| Daemon | Description |
| --- | --- |
| syslogd | Handles system logs, including errors |
| sshd | Handles SSH connections |
| pulseaudio | Handles system audio |
| dbus-daemon | Handles communication between applications |
| cupsd | Handles printer integration |

Interacting with a Computer

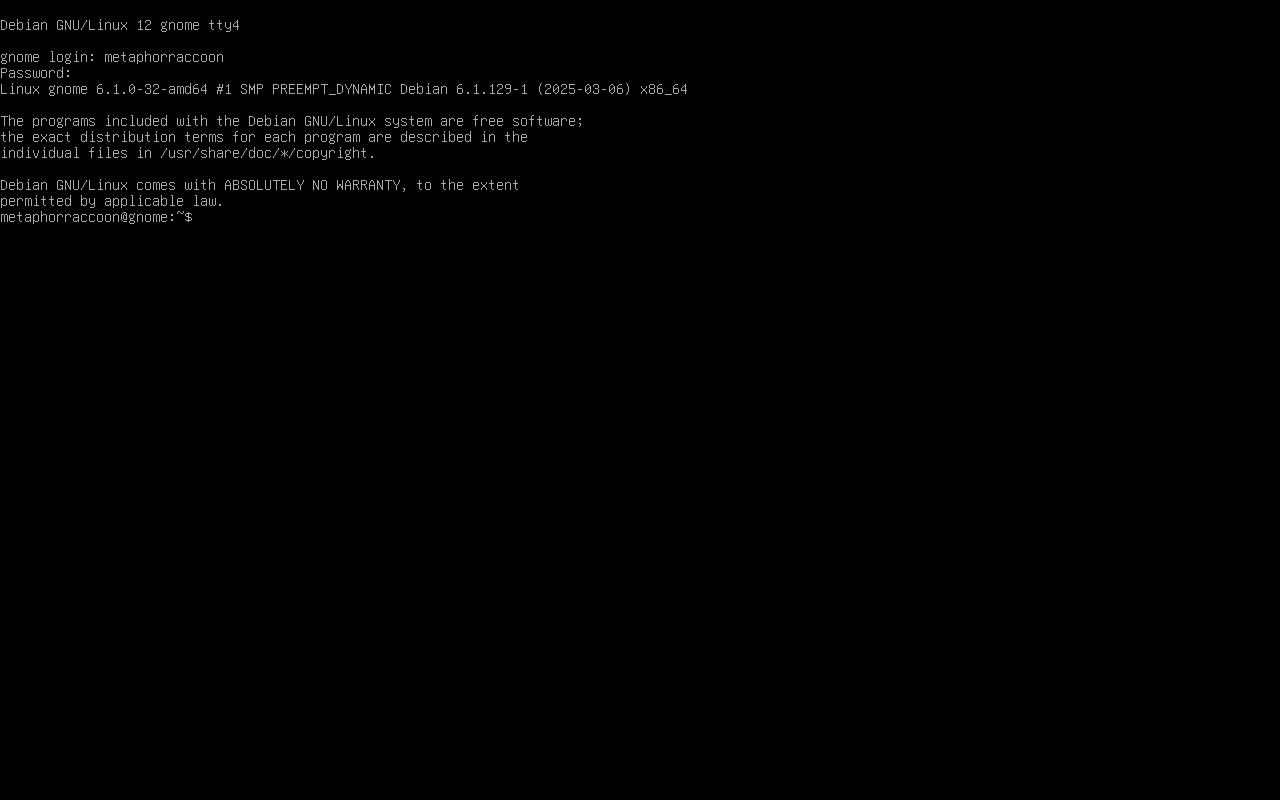

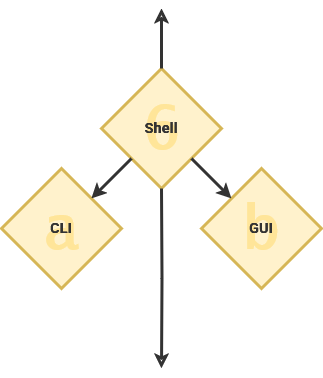

As part of the initialization program, the system loads the Shell – or user interface. This is the interactive element that evokes what we commonly consider to be the "operating system". The shell can be a command line or a graphical user interface.

It is named the "shell" because it is the outermost layer surrounding the operating system.

Command Line

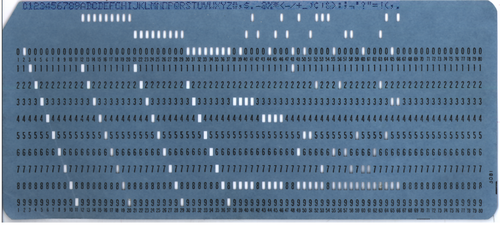

Before we had digital computers and operating systems, we had mechanical "analog" computers. These machines used a punch-card system that read data and operational programs from physical paper cards with holes punched out.

Along with the rise of digital computers, we crafted the now ubiquitous keyboard from our experience with the typewriter. With this tool, command line interfaces first emerged in the 1970s as the primary means of interacting with a computer.

They provide powerful, direct access to computer hardware and software. You accomplish this by using the terminal to run programs that accept text-based input. Terminal commands use a specific syntax to run programs with their necessary arguments. The command line also makes it easy to automate procedural tasks by writing shell scripts.
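The syntax of a typical command is the program name followed by its arguments, separated by spaces, with quotes protecting any argument that contains a space. As a rough illustration, Python's standard `shlex` module splits a line using similar rules to a POSIX shell; the helper name `parse_command` and the example path are made up.

```python
import shlex


def parse_command(line):
    """Split a command line into the program name and its list of arguments."""
    tokens = shlex.split(line)
    if not tokens:
        raise ValueError("empty command line")
    return tokens[0], tokens[1:]


program, arguments = parse_command("ls -l --color=auto '/home/my user'")
print(program)    # ls
print(arguments)  # ['-l', '--color=auto', '/home/my user']
```

Note that the quoted path stays together as a single argument even though it contains a space.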

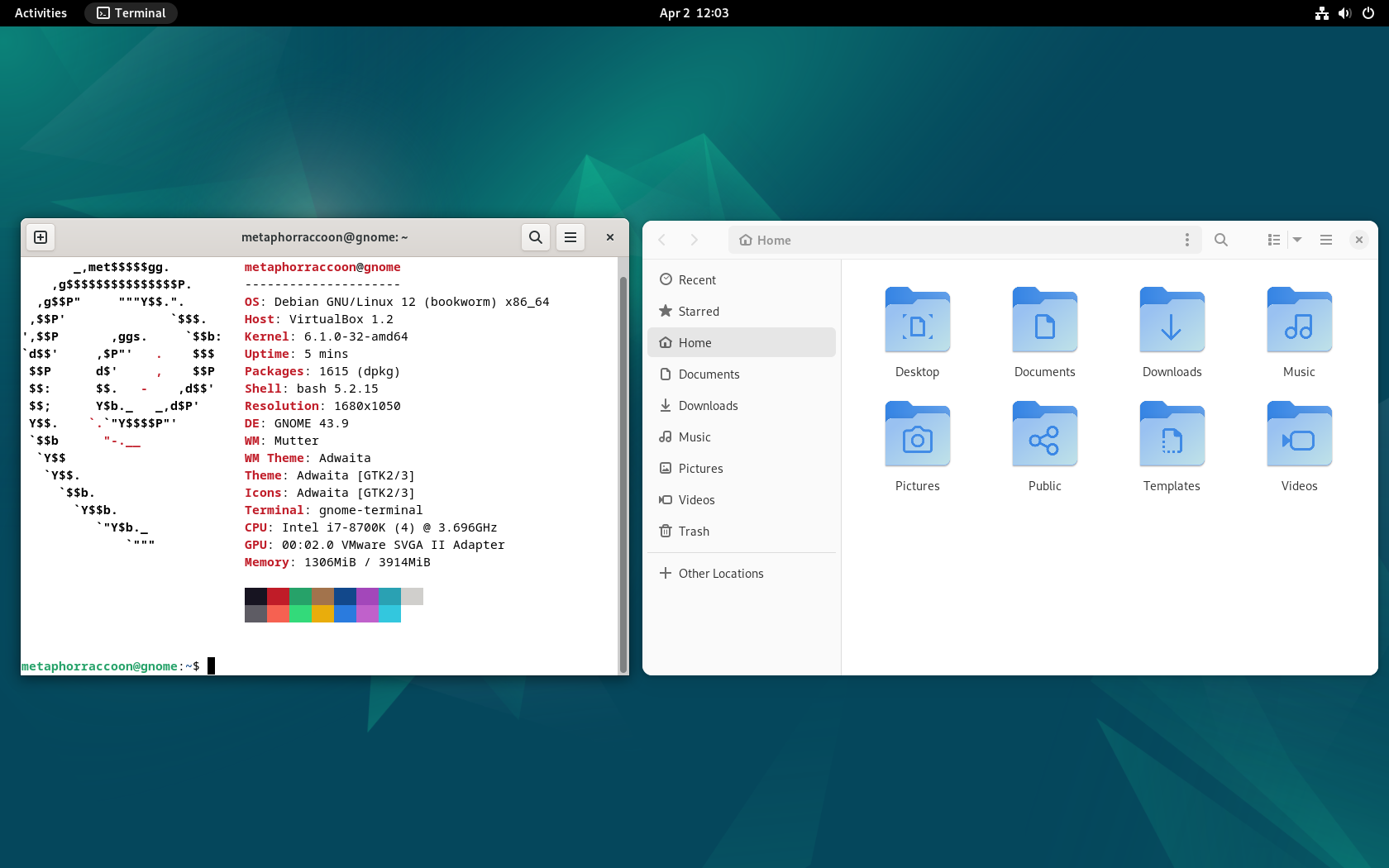

Graphical User Interface

Graphical user interfaces offer a visual desktop environment that allows you to interact with the operating system and applications. These interfaces use a display to view the "desktop", as well as a mouse and keyboard for users to interact with. GUIs – pronounced "gooey" – often come with a terminal emulator pre-installed, providing direct access to the command-line from your desktop.

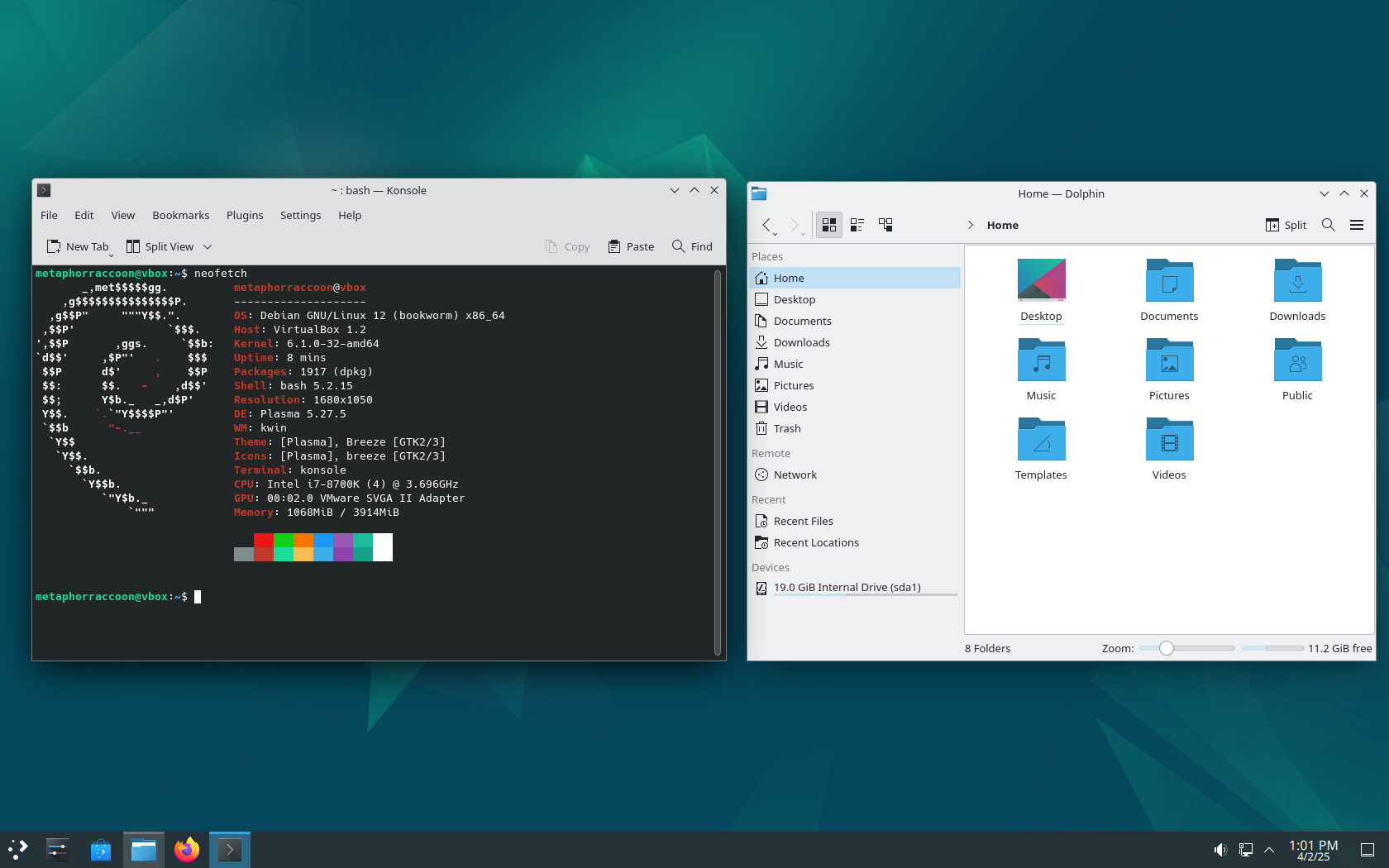

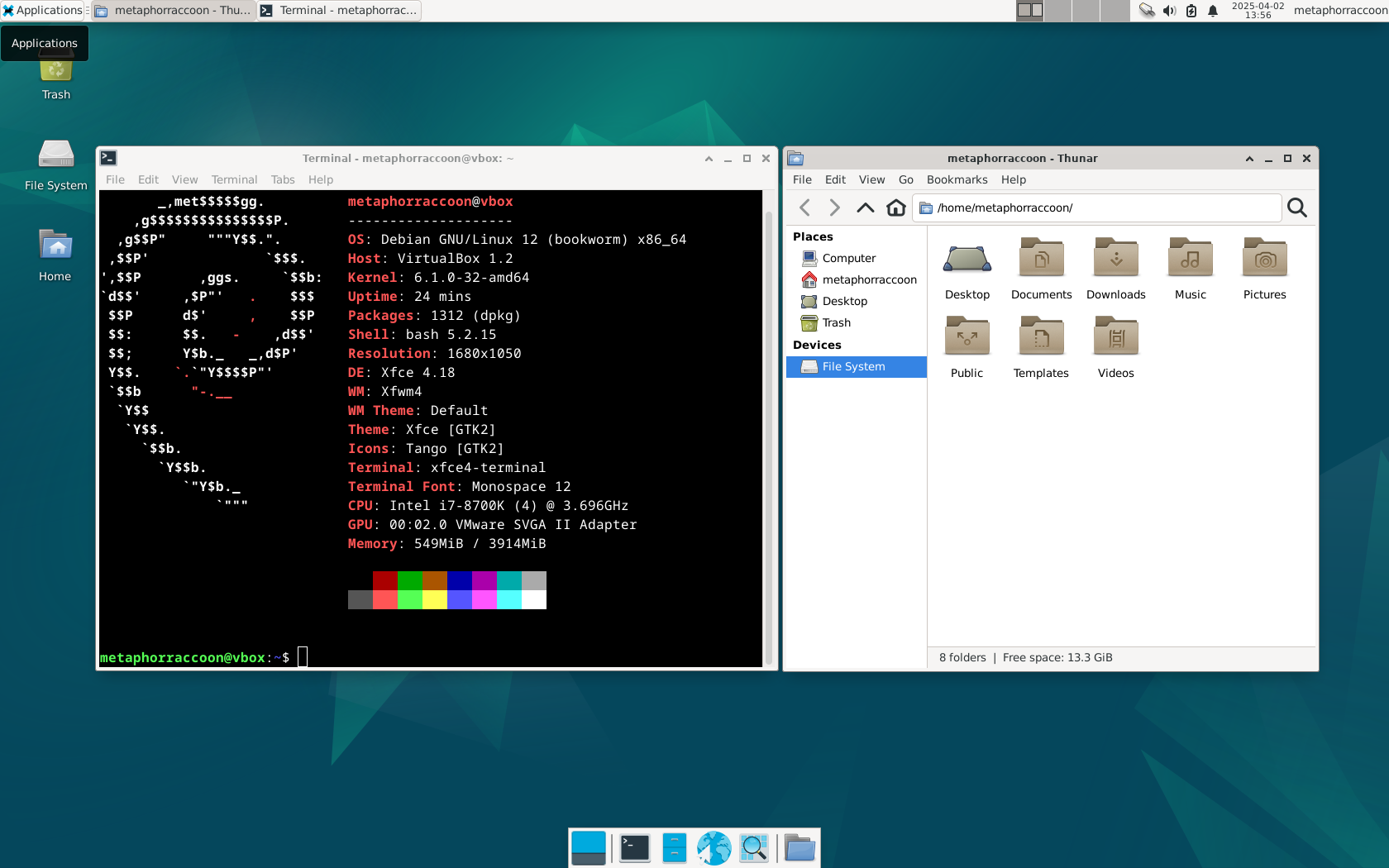

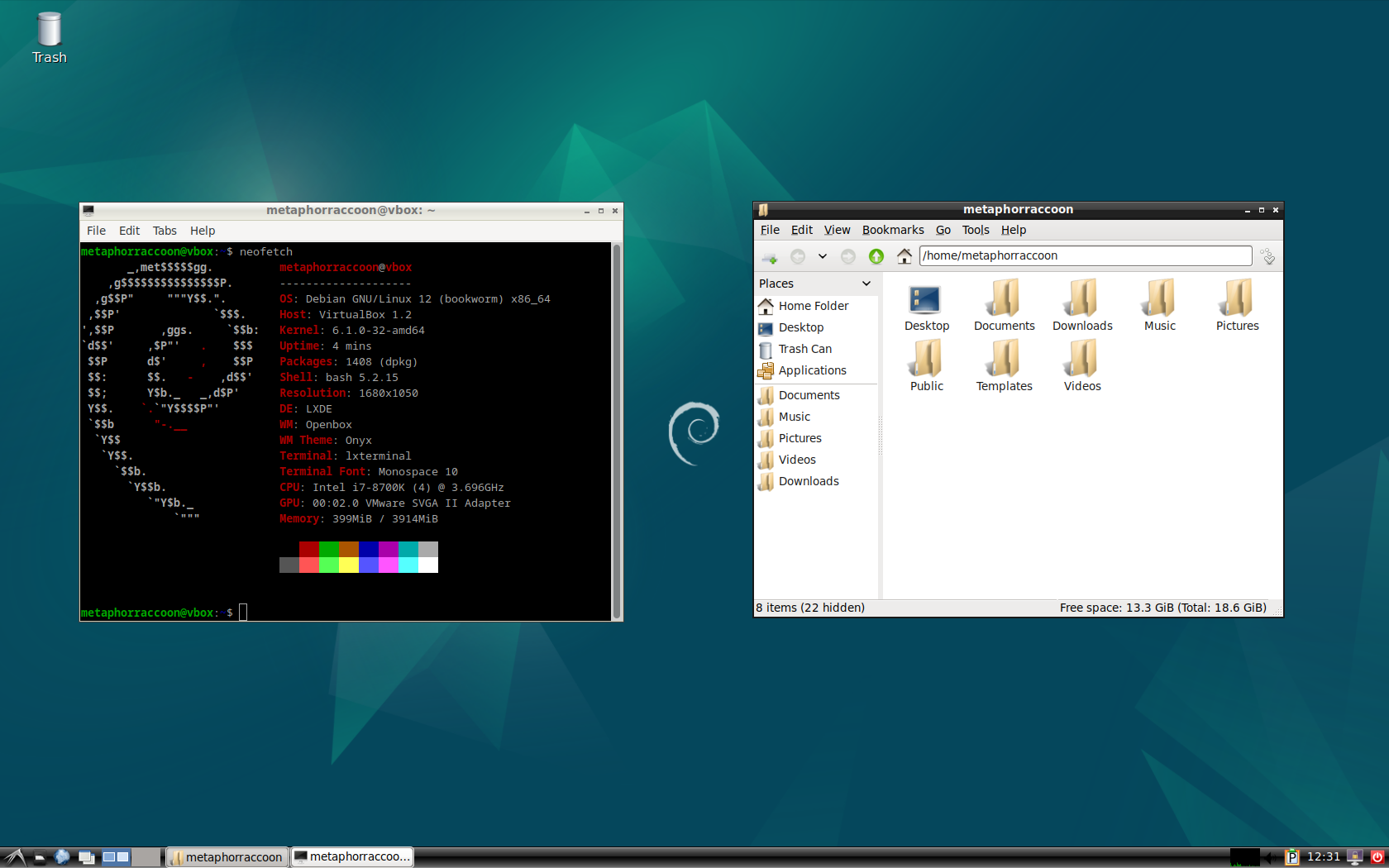

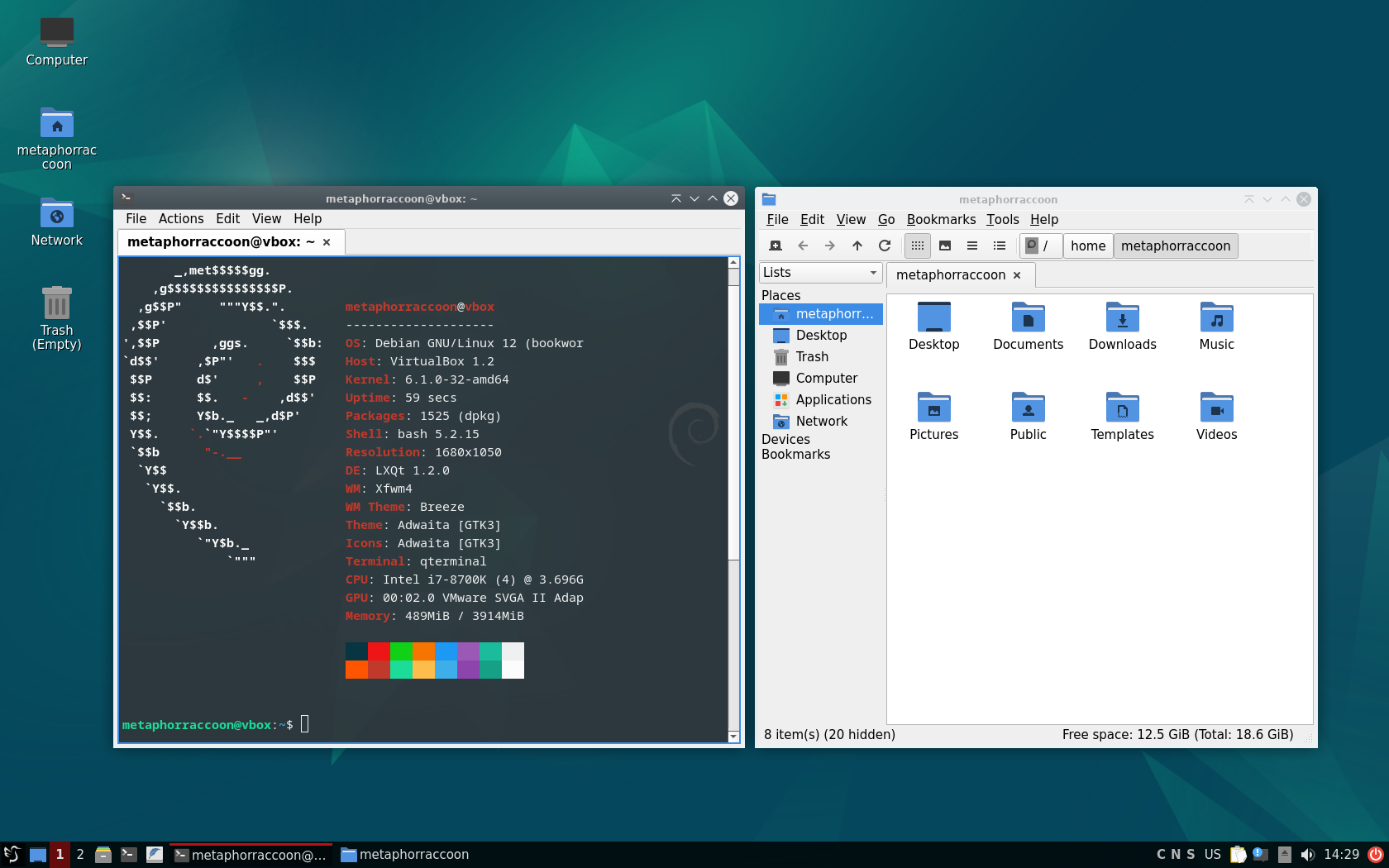

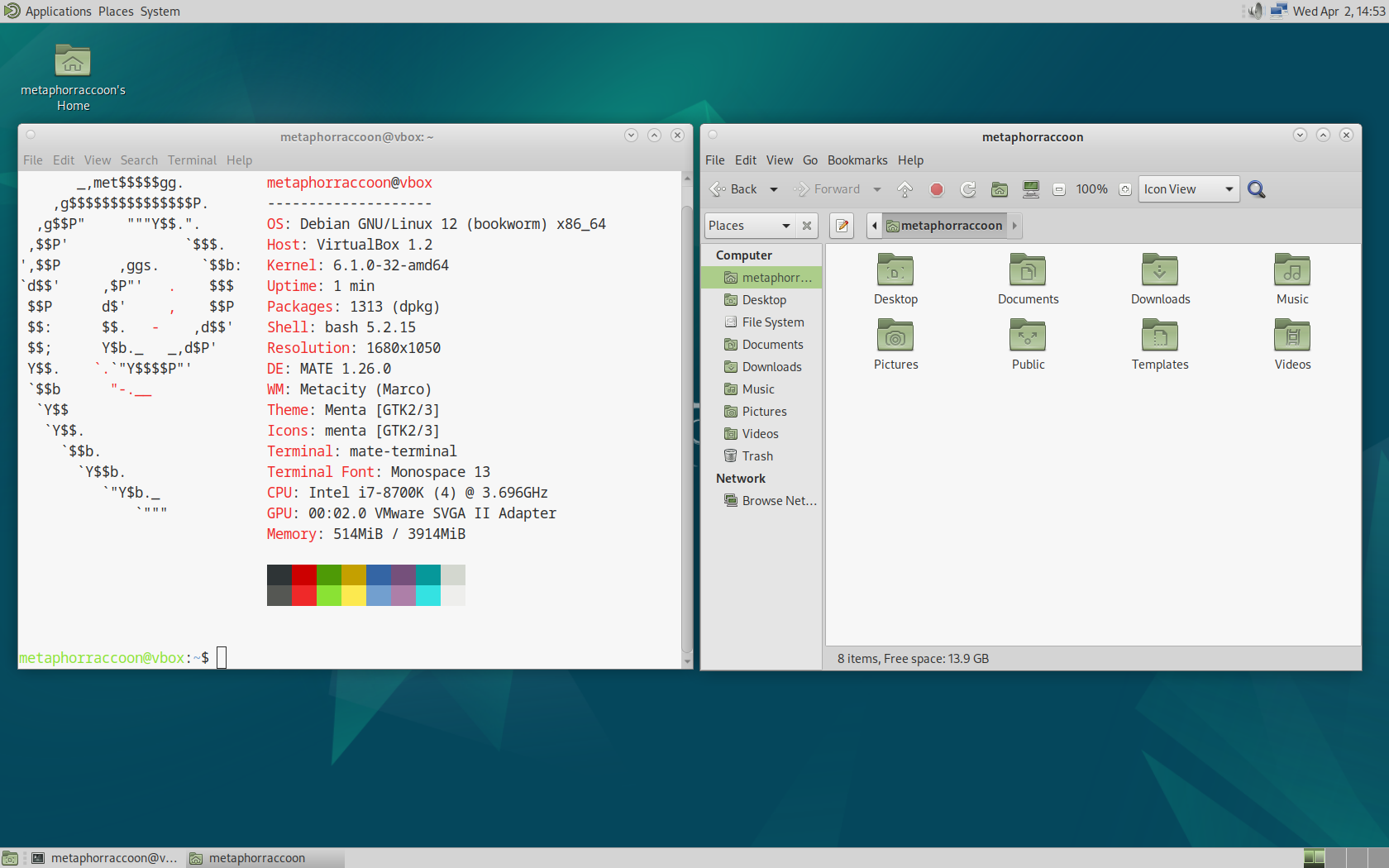

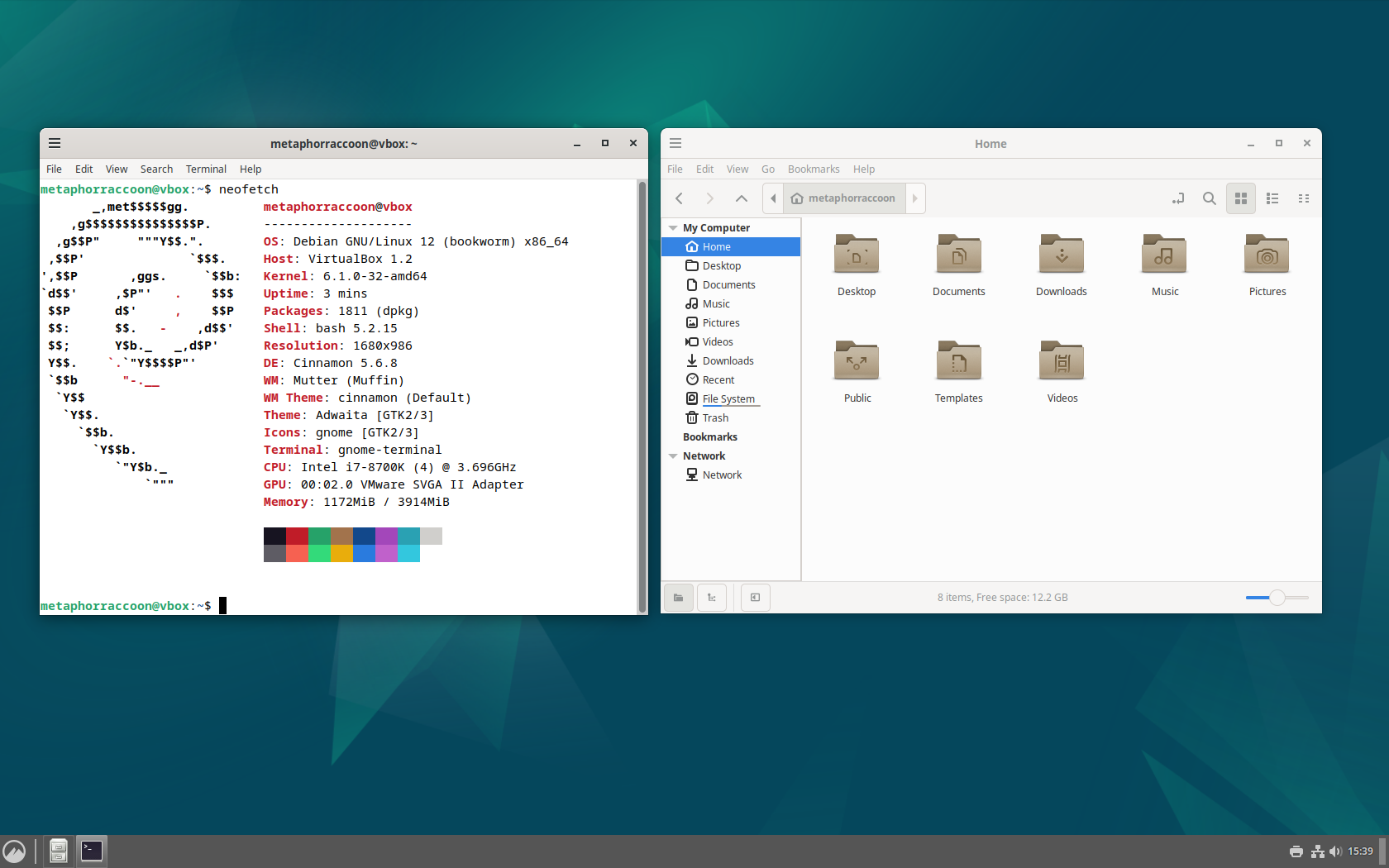

Debian uses GNOME by default, but other desktop environments are also available.

The Desktop Environment is largely a personal preference and defines the overall feel of your user experience. This choice affects what applications are installed by default, but you are free to install any compatible applications. While installing Debian, you can choose your preference or even include more than one to switch on-the-fly.

Wrangling Data

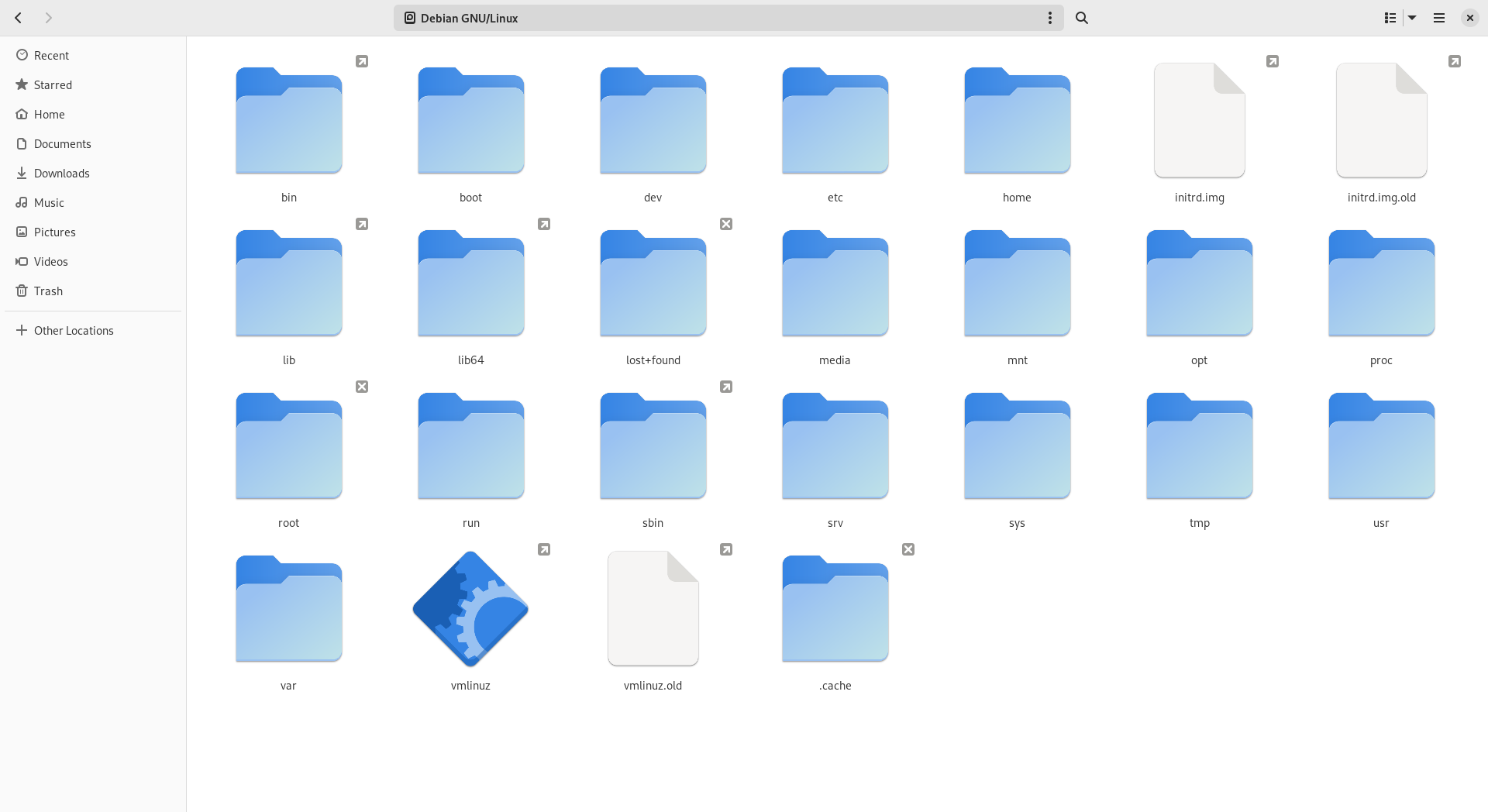

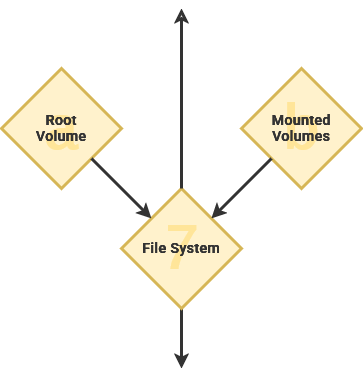

The shell allows users to interact with the data stored on disk drives through a command line or graphical user interface. This is accomplished using a file system on your hard drive that governs how files are organized and accessed.

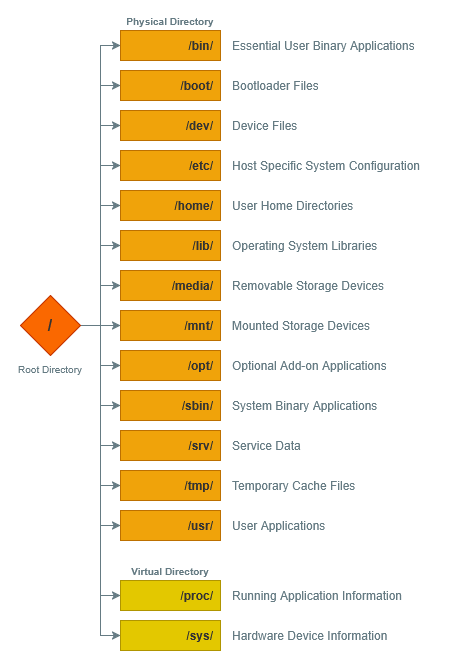

Files are expressed by their folder hierarchy – as a file path – starting with the root directory ("/"). As an example, personal user account data is stored within the home directory, or "/home".

Windows paths use a backslash ("\") while Linux paths use a forward slash ("/").
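The difference between the two path styles can be explored with Python's `pathlib` module, which understands both conventions regardless of the system it runs on. The file names below are hypothetical.

```python
from pathlib import PurePosixPath, PureWindowsPath

linux_path = PurePosixPath("/home/alice/notes.txt")          # hypothetical file
windows_path = PureWindowsPath(r"C:\Users\alice\notes.txt")  # hypothetical file

# Each path breaks down into a root followed by its folders and file name.
print(linux_path.parts)    # ('/', 'home', 'alice', 'notes.txt')
print(windows_path.parts)  # ('C:\\', 'Users', 'alice', 'notes.txt')

print(linux_path.parent)   # /home/alice
print(windows_path.drive)  # C:
```

The Linux path starts from the single root "/", while the Windows path starts from a drive letter.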

Linux has an "everything is a file" design philosophy. This functionally means that hardware devices, processes, and sockets are overlaid onto our root folder. As an example, the directory "/proc" doesn't actually exist on our storage drive. Instead, it is a virtual filesystem representing real-time information from the kernel and other processes.
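One way to see this philosophy in practice is to ask the operating system what kind of object sits behind a path. The sketch below uses Python's standard `os` and `stat` modules; the function name `kind_of` is made up, and the example paths are only checked if they exist on the machine running it.

```python
import os
import stat


def kind_of(path):
    """Describe what sort of object a path refers to, based on its mode bits."""
    mode = os.stat(path).st_mode
    if stat.S_ISDIR(mode):
        return "directory"
    if stat.S_ISCHR(mode):
        return "character device"
    if stat.S_ISBLK(mode):
        return "block device"
    if stat.S_ISSOCK(mode):
        return "socket"
    if stat.S_ISFIFO(mode):
        return "named pipe"
    if stat.S_ISREG(mode):
        return "regular file"
    return "other"


for candidate in ("/", "/dev/null", "/proc/uptime"):
    if os.path.exists(candidate):
        print(candidate, "->", kind_of(candidate))
```

Devices, sockets and kernel information all answer to the same path-based interface as ordinary files and folders.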

Similarly, Docker creates a virtual file named "/var/run/docker.sock" that acts as a "socket" for communication between services. This is how services like Portainer can access and manage existing containers, as well as create new ones.
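The general mechanism behind such a socket file can be sketched without Docker. The example below is an assumption-laden illustration: it creates its own Unix domain socket in a temporary folder (the name "demo.sock" is hypothetical), lets a tiny server answer one request, and has a client talk to it through the file path. It does not speak Docker's actual protocol.

```python
import os
import socket
import tempfile
import threading


def serve_once(server):
    """Accept one connection and answer a single request in upper case."""
    connection, _ = server.accept()
    with connection:
        request = connection.recv(1024)
        connection.sendall(request.upper())


def ask(socket_path, message):
    """Connect to the socket file, send a message, and return the reply."""
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as client:
        client.connect(socket_path)
        client.sendall(message)
        return client.recv(1024)


def demo():
    with tempfile.TemporaryDirectory() as directory:
        socket_path = os.path.join(directory, "demo.sock")  # hypothetical name
        with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as server:
            server.bind(socket_path)  # this creates the socket file on disk
            server.listen(1)
            worker = threading.Thread(target=serve_once, args=(server,))
            worker.start()
            reply = ask(socket_path, b"ping")
            worker.join()
        return reply


print(demo())  # b'PING'
```

Any program with permission to open the socket file can communicate with the service behind it, which is why access to such files is usually restricted.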

When a computer system has multiple storage drives, each one is mounted as a subdirectory, conventionally within "/mnt". This enables all of our storage drives to be accessible from the root "/" folder. As an example, we could mount an external USB drive to "/mnt/flashdrive".
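On Linux, the kernel lists the currently mounted filesystems in "/proc/mounts", one per line, as a device, a mount point, a filesystem type and some options. The sketch below parses text in that layout; the sample devices are hypothetical, and the function name `parse_mounts` is made up for illustration.

```python
def parse_mounts(text):
    """Map each mount point to its (device, filesystem type) pair."""
    mounts = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue  # skip blank or malformed lines
        device, mount_point, fs_type = fields[:3]
        mounts[mount_point] = (device, fs_type)
    return mounts


# Sample text in the /proc/mounts layout; the devices are hypothetical.
SAMPLE = """\
/dev/sda1 / ext4 rw,relatime 0 0
proc /proc proc rw,nosuid,nodev,noexec 0 0
/dev/sdb1 /mnt/flashdrive vfat rw,relatime 0 0
"""

for mount_point, (device, fs_type) in parse_mounts(SAMPLE).items():
    print(f"{mount_point:16} {device:10} {fs_type}")
```

On a real system, the same function could be given the contents of "/proc/mounts" instead of the sample text.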

The Windows operating system assigns a letter designation to each drive, effectively creating multiple root folders – such as "C:\" and "D:\".

Systematic Functions

Software – like a computer program – instructs a computer how to complete a task. While an operating system offers the general foundation and some general productivity tools, applications are what leverage computers for specific operations.

    program hello
      ! This is a comment line; it is ignored by the compiler
      print *, 'Hello, World!'
    end program hello

This is a very basic snippet of the Fortran programming language, which was created in 1957. The introduction of high-level programming languages – primarily based on the English language – allowed for more readable instructions. This helped to make coding much easier while also allowing software to be more portable across computer types.

Software code, written in a programming language like Fortran, is run through a compiler or interpreter to execute the instructions. Interpreted languages are run line-by-line from their written form – commonly known as a script. Compiled languages are translated ahead of time into an executable built for a specific architecture and operating system, such as macOS, Windows or Debian.

A Common Language

The System Call Interface allows applications to access computer hardware without needing to communicate with specific hardware components directly. This abstracts away the underlying details, much like the delineation between hardware and software.

This is an example of an Application Programming Interface – or API – that allows software to communicate through a mutually agreed upon language. This is how a web browser can access a web camera connected through a USB cable, or a word processor can edit a file on your hard drive.
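A small sketch can make this concrete. Python's `os` module offers thin wrappers around the operating system's file-related system calls, so the program below never needs to know which kind of drive holds the file. The function name `write_then_read` and the file name are made up for illustration.

```python
import os
import tempfile


def write_then_read(path, payload):
    """Write bytes to a file and read them back using low-level descriptors."""
    # Ask the kernel to create (or empty) the file and open it for writing.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    try:
        os.write(fd, payload)
    finally:
        os.close(fd)

    # Ask the kernel to open the same file for reading.
    fd = os.open(path, os.O_RDONLY)
    try:
        return os.read(fd, len(payload))
    finally:
        os.close(fd)


with tempfile.TemporaryDirectory() as directory:
    example = os.path.join(directory, "example.txt")  # hypothetical file
    print(write_then_read(example, b"hello kernel"))  # b'hello kernel'
```

The same calls work whether the file lives on a hard drive, a flash drive or a network share, because the kernel and its drivers handle the hardware details.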

User Applications

These programs are intended for end-users to perform tasks that are not related to the general operation of the computer. Program, application, software – and even script – are often used interchangeably to refer to this type of code. This category includes – but is not limited to – office productivity suites, media players, web browsers and photo editors. Many modern operating systems provide storefronts to download both open-source and proprietary software to your computer.

Server Applications

While user applications are geared towards a person sitting in a chair in front of that computer, server software is intended for use by one or more computers and the people using them. When you visit most websites and web apps – such as diagrams.net – you are using your computer to access a server application.

The client-server model structures applications so that individual people or computers – known as clients – can retrieve information from a centralized location – known as a server. Instead of installing and managing user applications on a personal computer, we can create a server application accessible by people on our network. When a server shares its resources, it is providing a service.
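The client-server model can be demonstrated on a single machine. The following sketch, built only on Python's standard library, starts a tiny web server on the local loopback address and then acts as a client requesting a page from it. The handler name `GreetingHandler`, the function name `fetch_greeting` and the greeting text are made up for illustration.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class GreetingHandler(BaseHTTPRequestHandler):
    """Server side: answer every GET request with a short text message."""

    def do_GET(self):
        body = b"hello from the server"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo quiet


def fetch_greeting():
    """Start the server, request one page as a client, then shut down."""
    server = HTTPServer(("127.0.0.1", 0), GreetingHandler)  # port 0: any free port
    port = server.server_address[1]
    worker = threading.Thread(target=server.serve_forever, daemon=True)
    worker.start()
    try:
        # Client side: connect, ask for "/", and read the reply.
        connection = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
        connection.request("GET", "/")
        body = connection.getresponse().read()
        connection.close()
        return body
    finally:
        server.shutdown()
        server.server_close()


print(fetch_greeting())  # b'hello from the server'
```

In a real deployment the server and its clients would usually run on different computers, but the exchange of request and response works the same way.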

The rise of cloud computing has led to server applications being provided to end-users over the World Wide Web. These are known as Software as a Service – such as Google Workspace or Microsoft Office Online. Generally these are accessed through a web browser, but many cloud services provide platform-specific apps – like Spotify for Windows.

Granular Security

https://www.dsbscience.com/freepubs/linuxoverwindows/node7.html

The Windows 9x tree is single user and went much further than that in terms of lack of security. Since they were single user, the user was by definition 'administrator' and had full access to all system resources. Exploited vulnerabilities therefore could not be contained to a non-privileged userspace. Usernames were only used for network identification, not really as a true authentication mechanism. In addition, these systems were based on file systems (FAT16 and FAT32) that did not have file permissions.

Linux, a derivative of the Unix OS, was multi-user from its inception. The concept of privilege was built into the design from the start and rests on the maturity (two decades of real world testing) of Unix. Except for system tools, most applications in Linux do run properly when run as an 'ordinary' user; to gain privilege, one can "su" and provide the administrative (root) password. File systems provide per-file permissions for 'user,' 'group' and 'other.' It is easy to configure Linux so that default file permissions are quite restrictive, forcing the administrator to willfully 'open' the security where needed.
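The per-file permissions for 'user', 'group' and 'other' can be inspected and changed from a program. This sketch assumes a Linux or other Unix-like system; the function name `permission_string` and the file name are made up for illustration.

```python
import os
import stat
import tempfile


def permission_string(path):
    """Return the ls-style mode string for a path, such as '-rw-r-----'."""
    return stat.filemode(os.stat(path).st_mode)


with tempfile.TemporaryDirectory() as directory:
    path = os.path.join(directory, "report.txt")  # hypothetical file
    with open(path, "w") as handle:
        handle.write("quarterly numbers\n")

    # user: read and write, group: read only, other: no access
    os.chmod(path, 0o640)
    print(permission_string(path))  # -rw-r-----
```

After the leading file-type character, the string reads as three groups of three: the first for the owning user, the second for the group and the third for everyone else.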

The persistence of the Heartbleed security bug in a critical piece of code for two years has been considered a refutation of Raymond's dictum, known as Linus's law: "given enough eyeballs, all bugs are shallow".[6][7][8][9] Larry Seltzer suspects that the availability of source code may cause some developers and researchers to perform less extensive tests than they would with closed source software, making it easier for bugs to remain.[9] In 2015, the Linux Foundation's executive director Jim Zemlin argued that the complexity of modern software has increased to such levels that specific resource allocation is desirable to improve its security. Regarding some of 2014's largest global open source software vulnerabilities, he says, "In these cases, the eyeballs weren't really looking".[8] Large scale experiments or peer-reviewed surveys to test how well the mantra holds in practice have not been performed.[10]

Empirical support of the validity of Linus's law[11] was obtained by comparing popular and unpopular projects of the same organization. Popular projects are projects with the top 5% of GitHub stars (7,481 stars or more). Bug identification was measured using the corrective commit probability, the ratio of commits determined to be related to fixing bugs. The analysis showed that popular projects had a higher ratio of bug fixes (e.g., Google's popular projects had a 27% higher bug fix rate than Google's less popular projects). Since it is unlikely that Google lowered its code quality standards in more popular projects, this is an indication of increased bug detection efficiency in popular projects.