What is the Cloud?

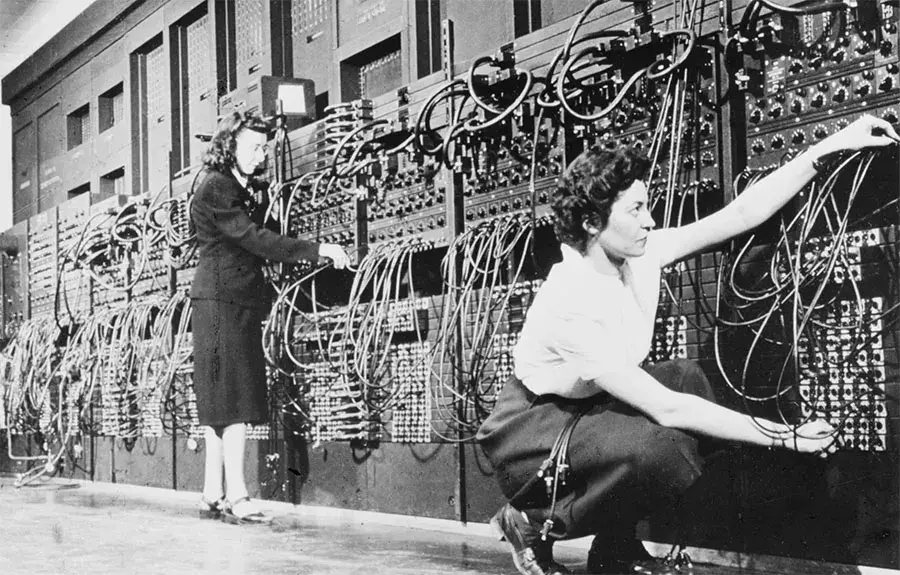

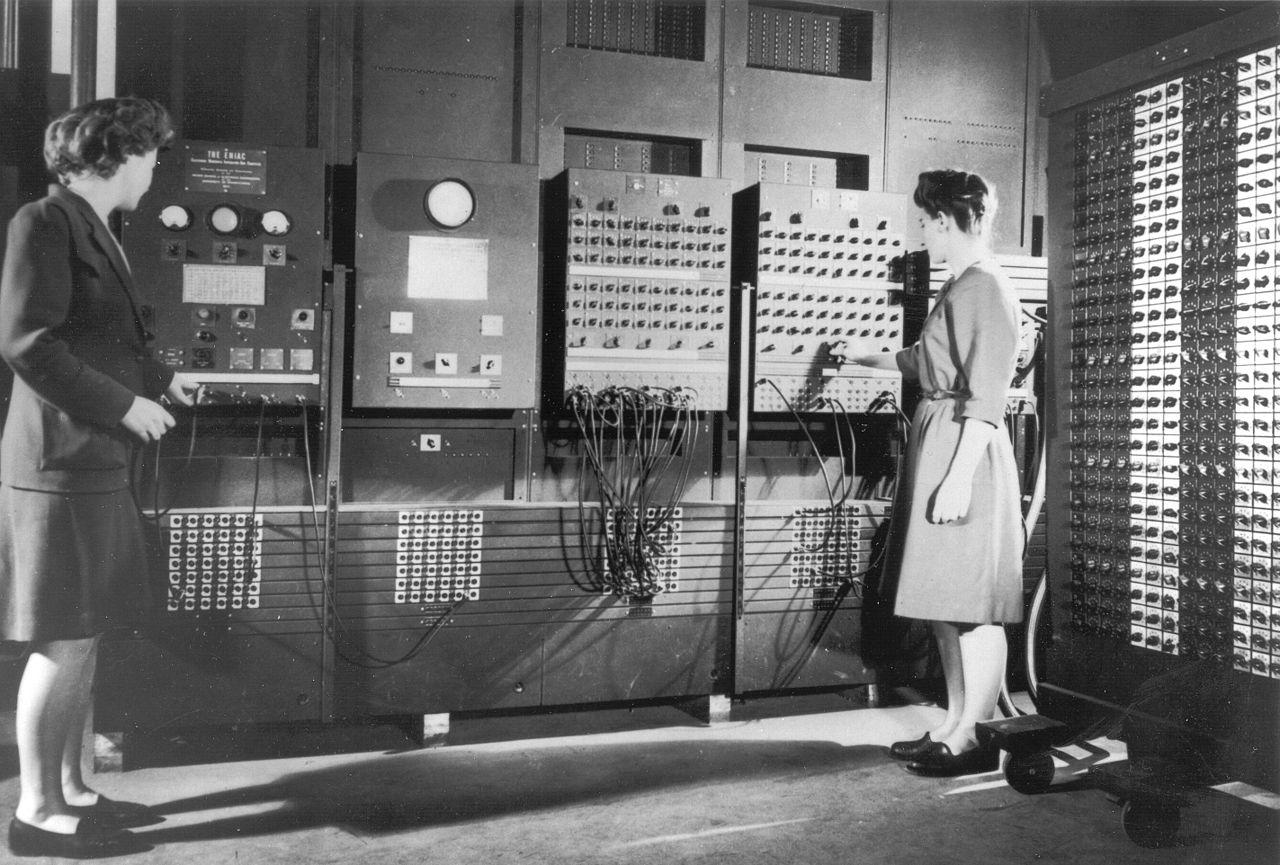

The first "modern" general-purpose computers, ENIAC and Colossus, were created in the 1940s. By the 1960s, computers were becoming more commonplace at larger institutions, but were still largely inaccessible to many professionals and researchers, let alone consumers.

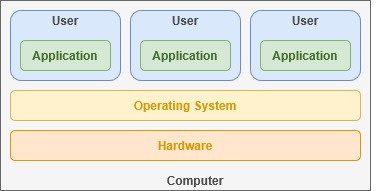

Time sharing was a technique that allocated computer resources to multiple users, allowing for true multi-tasking for the first time. Instead of running programs one after another, each user could run their own program and the server would quickly cycle through each user's task, performing a little bit of each at a time.

This gave the illusion that each "terminal" was a personal computer running only its user's tasks, when in reality it was acting as a portal to a shared local computer. Although it predates the concept of the internet, time sharing marks the start of our exploration into the modern "cloud".
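The cycling behavior described above can be sketched as a simple round-robin scheduler. This is only an illustration under stated assumptions: the `time_share` function, the user names, and the idea of measuring work in abstract "units" are all hypothetical, not a description of how any historical system was built.

```python
from collections import deque


def time_share(tasks, quantum=1):
    """Cycle through users, giving each one `quantum` units of work per turn.

    `tasks` maps a (hypothetical) user name to the units of work remaining.
    Returns the order in which slices of work were performed.
    """
    queue = deque(tasks.items())
    timeline = []
    while queue:
        user, remaining = queue.popleft()
        work = min(quantum, remaining)
        timeline.append((user, work))
        remaining -= work
        if remaining > 0:
            # Unfinished tasks go to the back of the line for another turn.
            queue.append((user, remaining))
    return timeline


schedule = time_share({"alice": 3, "bob": 1, "carol": 2})
print(schedule)
```

Because every user gets a turn before anyone gets a second one, short tasks finish quickly and no single long task monopolizes the machine.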

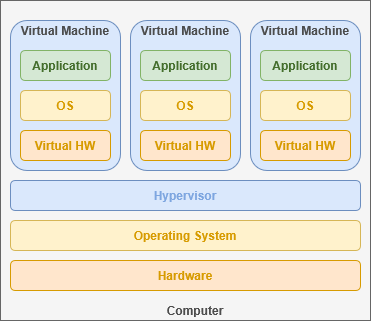

ARPANET, created in 1969, was the first long-distance packet-switched network and the precursor to the Internet; in 1983 it adopted the same TCP/IP standards we use to this day. Around the same time as ARPANET's launch, IBM's CP-40 expanded upon time sharing to create virtualization: dividing a single computer's hardware resources into multiple software environments. This allowed multiple secure and isolated server environments to run within a single physical hardware system.

In 1991, the World Wide Web was released to the public, opening the modern internet as we know it to a worldwide consumer base. By paying an internet service provider, anyone could connect to the World Wide Web. Before the turn of the century, the first theory of cloud computing was defined, creating a foundation for easily scalable server infrastructure.

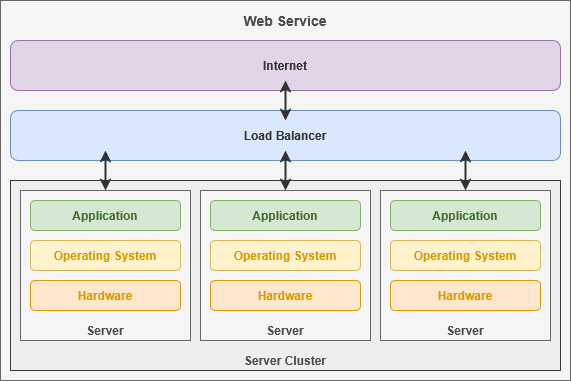

Distributed computing allows independent hardware systems to be linked together over a network and share their resources to support a unified service. It enabled websites to support a hundred thousand users by balancing the workload across server clusters instead of trying to juggle everything on one server.
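As a rough sketch of what "balancing the workload" can mean, the example below sends each incoming request to whichever server currently carries the least load. The function name, the server names, and the numeric request costs are assumptions made for illustration; real load balancers use many different strategies.

```python
def assign_requests(request_costs, servers):
    """Place each request on the least-loaded server (a least-load strategy).

    `request_costs` is a list of hypothetical work amounts, one per request.
    Returns the chosen server for each request and the final load per server.
    """
    load = {server: 0 for server in servers}
    placement = []
    for cost in request_costs:
        # Pick the server with the smallest load; ties go to the first listed.
        target = min(load, key=load.get)
        load[target] += cost
        placement.append(target)
    return placement, load


placement, load = assign_requests([5, 3, 2, 4, 1], ["web-1", "web-2", "web-3"])
print(placement)
print(load)
```

No single server ends up with all fifteen units of work, which is the point of spreading a service across a cluster.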

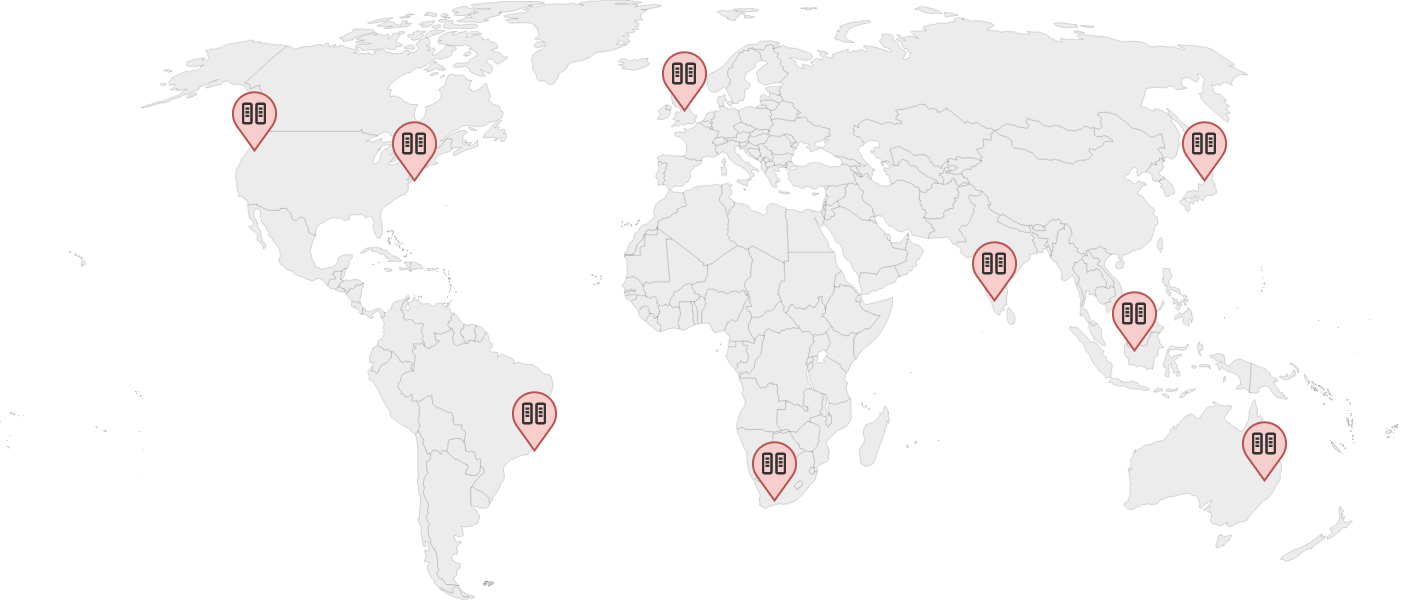

This allowed individual data centers to be spread across the globe. Whenever a service was accessed over the internet, the user could be geo-located and directed to the server closest to them. This provided better connection quality, split up the workload, and created more space for physical data storage.
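One way to picture this routing step is to compare the user's coordinates against each data center and pick the shortest great-circle distance. The sketch below assumes three hypothetical data centers with approximate coordinates; in practice, providers typically rely on DNS and network-level measurements rather than raw geography.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371

# Hypothetical data centers with approximate (latitude, longitude) positions.
DATA_CENTERS = {
    "frankfurt": (50.11, 8.68),
    "virginia": (38.95, -77.45),
    "singapore": (1.35, 103.82),
}


def haversine_km(a, b):
    """Great-circle distance in kilometers between two (lat, lon) points."""
    lat1, lon1, lat2, lon2 = map(radians, (*a, *b))
    h = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(h))


def nearest_data_center(user_location):
    """Return the name of the data center closest to the user."""
    return min(DATA_CENTERS, key=lambda name: haversine_km(user_location, DATA_CENTERS[name]))


print(nearest_data_center((48.86, 2.35)))  # a user near Paris
```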

By the early 2000s, technology companies had invested heavily in this infrastructure. This enabled businesses to forgo hosting their own technology infrastructure within their buildings and instead rely on an externally contracted service.

Google and Apple both released services targeting consumers, offering access to storage space and cloud-based tools. Amazon Web Services soon after introduced its "cloud" computing and storage services, through which businesses could rent server resources and storage space. Dropbox was unveiled in 2007, offering 2GB of free cloud storage to everyone by relying heavily on AWS cloud services. This period also marks the ascent of hosted open-source software services like WordPress, which currently powers a sizeable portion of websites and holds a commanding majority of the CMS market.

Contemporary cloud computing combines virtualization, distributed computing, and internet connections to build vast server farm infrastructures around the globe. By dividing up server clusters into numerous isolated software environments, data centers essentially create digital apartments for multiple tenants.
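The "digital apartments" idea can be sketched as a host that hands out fixed slices of its hardware to tenants and refuses new ones once it is full. The `Host` class, the tenant names, and the resource figures below are hypothetical; this models only the bookkeeping, not the isolation that a real hypervisor enforces.

```python
class Host:
    """A physical server whose CPUs and memory are divided among tenants."""

    def __init__(self, cpus, memory_gb):
        self.free_cpus = cpus
        self.free_memory_gb = memory_gb
        self.tenants = {}

    def allocate(self, tenant, cpus, memory_gb):
        """Reserve an isolated slice for `tenant`; return False if it won't fit."""
        if tenant in self.tenants:
            raise ValueError(f"{tenant} already has an environment on this host")
        if cpus > self.free_cpus or memory_gb > self.free_memory_gb:
            return False
        self.free_cpus -= cpus
        self.free_memory_gb -= memory_gb
        self.tenants[tenant] = {"cpus": cpus, "memory_gb": memory_gb}
        return True


host = Host(cpus=8, memory_gb=32)
print(host.allocate("tenant-a", cpus=4, memory_gb=16))
print(host.allocate("tenant-b", cpus=4, memory_gb=8))
print(host.allocate("tenant-c", cpus=1, memory_gb=1))
```

In this run the third request is refused because the first two tenants already hold all eight CPUs, even though some memory remains.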

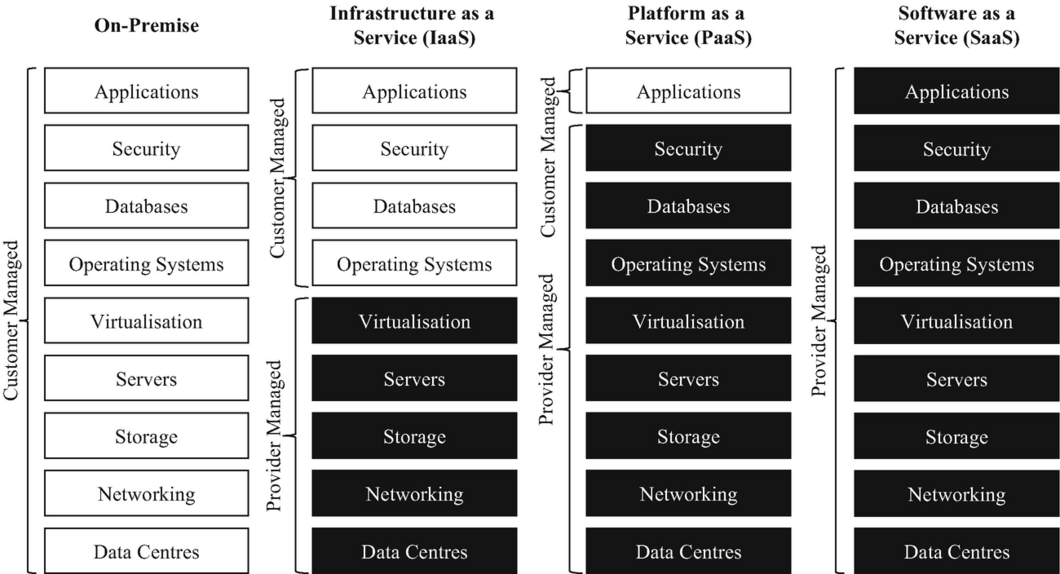

This infrastructure has led to a standardized "cloud computing" service model that offers a spectrum of granularity with regard to what is managed by the client versus the cloud computing service provider. This has led to a great deal of uncertainty regarding data privacy, unauthorized access, and legal compliance: with digital data outside the physical control of a company, how can it ensure its customers' digital security?

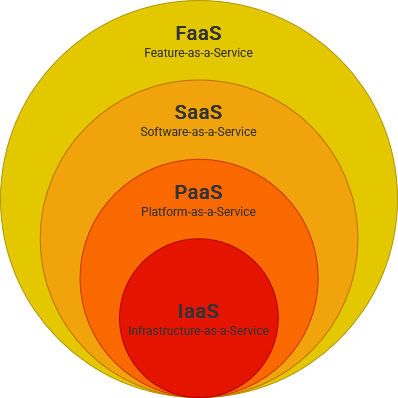

At one end of the spectrum, the client is in complete control over how they use their rented hardware, while at the other, everything occurs completely behind the scenes:

- Infrastructure-as-a-Service is the practice of renting maintained computer systems to corporations, allowing them to use those systems however they see fit.

- Platform-as-a-Service is an extension wherein corporations can rent access to a specific software deployment platform, such as a hosted environment for running Docker containers.

- Software-as-a-Service connects corporations and consumers to applications that are typically web-based, such as office and file-hosting services.

- Function-as-a-Service allows single functions to be run on demand, typically paid per use, such as image or data processing.

While these technologies were groundbreaking for creating global internet platforms, they have had many impacts on privacy and technology development. You may lose access to your data at any point because a service provider decides they don't want to host your content. On its current trajectory, cloud computing threatens to completely monopolize and privatize the very infrastructure that powers the Internet. More than 85% of global businesses are expected to adopt a "cloud-first" approach by 2025.

Within the modern digital world, data centers power much of the internet. By contracting out online services, corporations lose physical ownership of their data and shirk their responsibility as stewards of your digital data.

When self-hosting your own cloud server, you are acting as the infrastructure, platform, and software provider. Federation is a nascent concept that enables shared services across independently owned and operated servers, allowing you to maintain control of your data.