What is the Cloud?

Computers are machines that can automatically process data based on defined rules, like logic and arithmetic. Humans have long explored purpose-built "analog" machines that perform specific tasks, such as the Antikythera mechanism, an ancient Greek device from roughly the 2nd century BCE used to predict the positions of the Sun, Moon and planets.

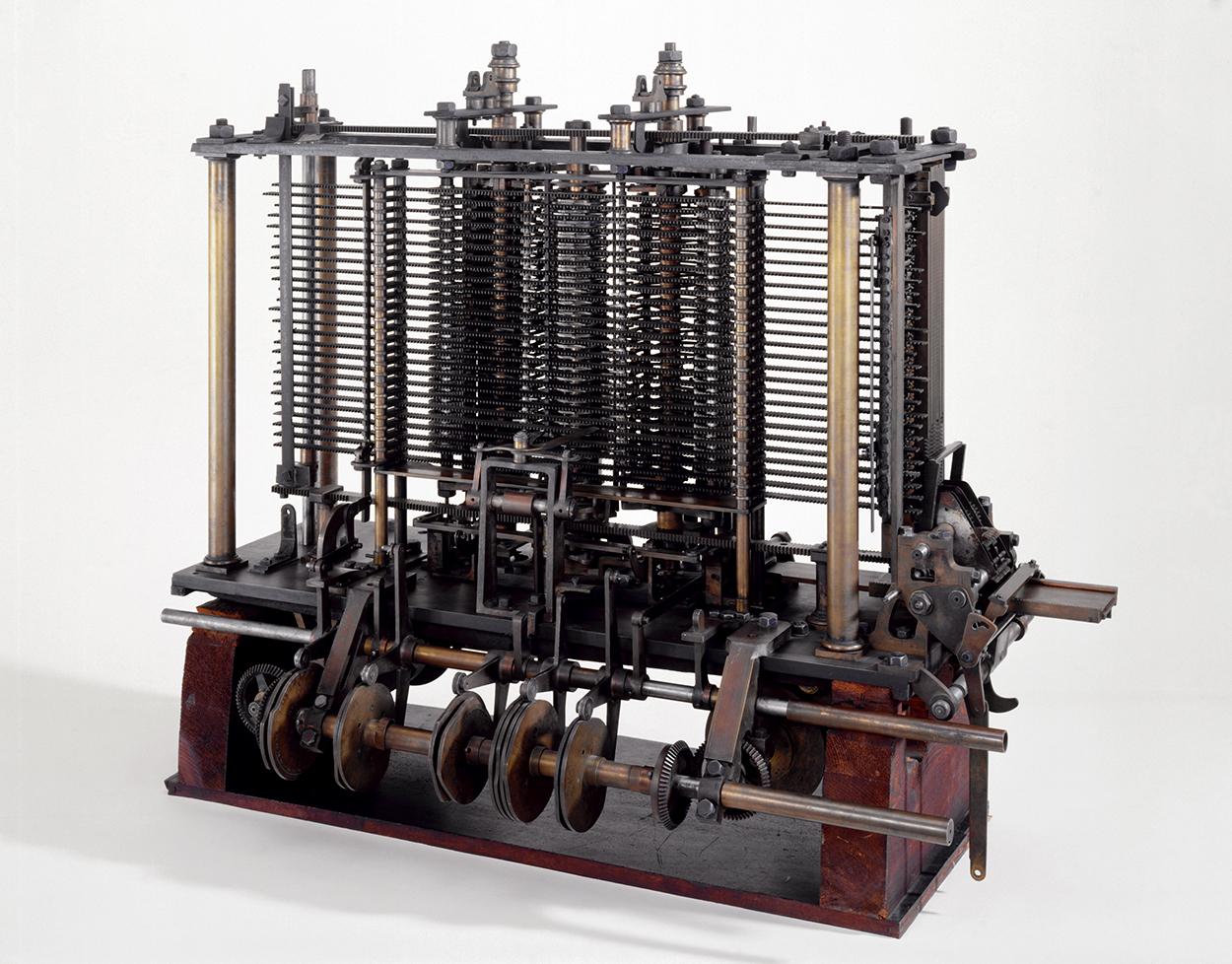

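By the early 1900s, part of the programmable computer theorized by Charles Babbage and Ada Lovelace in the 19th century had finally been built and demonstrated. While analog computers could reliably perform a single pre-defined task, Babbage's Analytical Engine was the first design to be "Turing-complete": in principle, it could carry out any computation given enough time and memory.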

This new class of computers could be programmed to carry out different sequences of operations, such as multiplication or conditional branching. This not only made computers more versatile, but also meant that similar machines could share the same programs.

Digital Systems

These first computers bear little resemblance to the discrete personal computers we use today. Analog systems used physical properties to perform their computations – such as connecting a circuit to turn on a warning light when the temperature within the room exceeds a certain threshold.

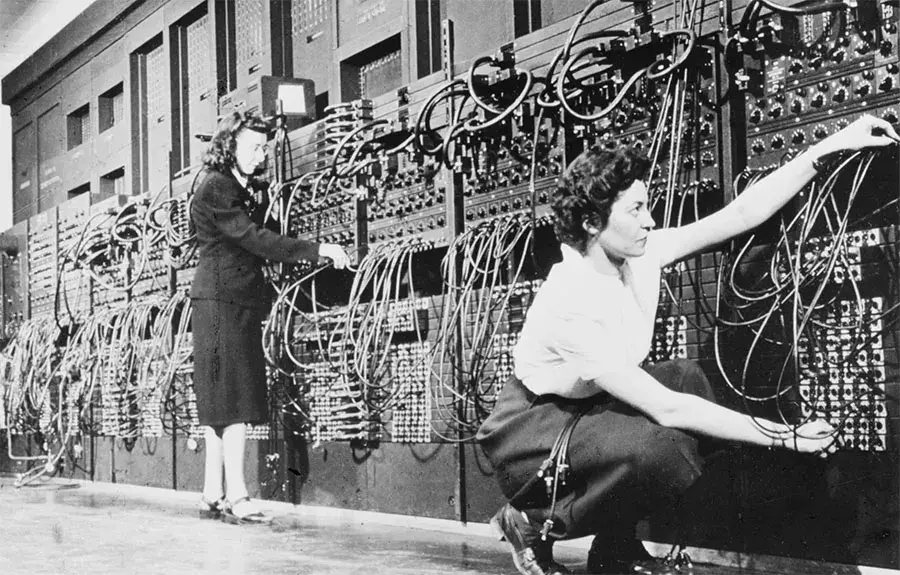

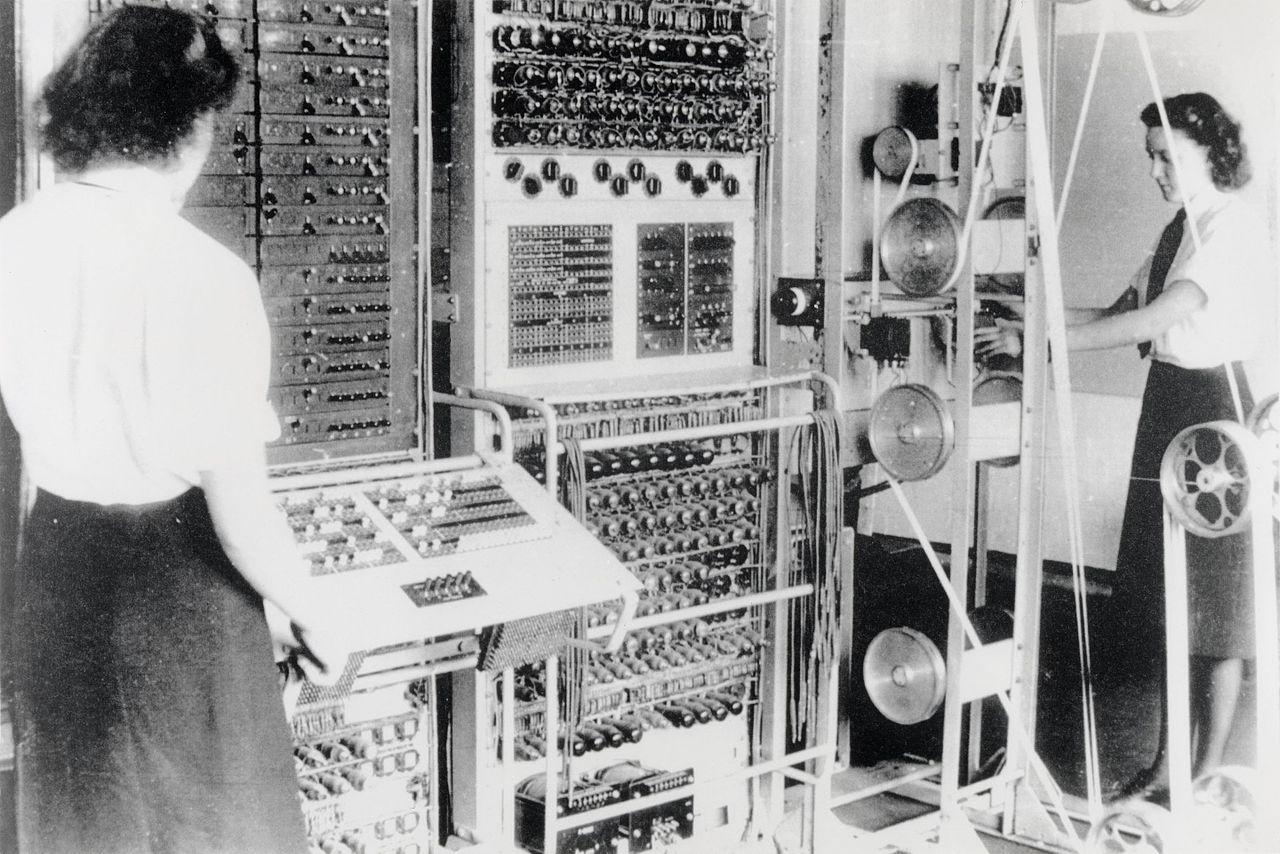

The first "digital" general-purpose computers were built in the early to mid 20th century. Rather than modeling a problem with continuous physical quantities, these systems represent data as discrete electrical signals and process them to achieve the desired outcome. Colossus and ENIAC were both digital and programmable, meaning that the hardware could be instructed to perform new tasks even after the computer had been built.

Women, many of whom had previously worked as human "computers" performing calculations by hand, were among the first programmers, tasked with writing and maintaining the programs needed to operate the first general-purpose digital computers.

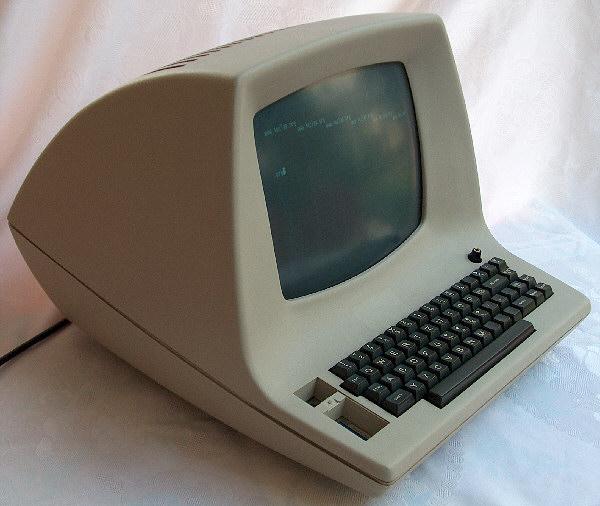

By the 1960s, digital computers were becoming more commonplace at large institutions, but were still largely inaccessible to many professionals and researchers, let alone everyday consumers. While hardware advances allowed computers to perform more complex tasks, software improved how many tasks could be performed at once.

Local Sharing

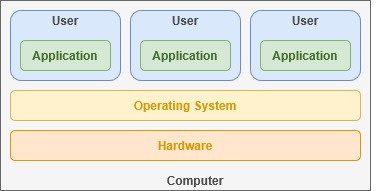

Time sharing is a technique that allocates a computer's resources among multiple users, enabling an early form of multitasking. Previously, programs ran in sequence, each finishing its task completely before the next one began. With time sharing, each user could run their own program while the computer quickly cycled through every user's task, performing a little of each at a time.
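To make "cycling through each user's task" concrete, here is a minimal Python sketch of round-robin scheduling, one simple way to time-share a processor. It assumes each program's work can be counted in abstract units and that the machine gives each user a fixed slice (the hypothetical `quantum` parameter) before moving on; real time-sharing systems relied on hardware timer interrupts and more elaborate schedulers.

```python
from collections import deque


def time_share(jobs, quantum):
    """Simulate round-robin time sharing (a toy model, not a real scheduler).

    jobs: dict mapping each user to the units of work their program needs.
    quantum: units of work performed for one user before switching to the next.
    Returns the list of (user, units_done) slices in the order they ran.
    """
    if quantum <= 0:
        raise ValueError("quantum must be positive")
    queue = deque(jobs.items())  # users waiting for the processor, in arrival order
    schedule = []
    while queue:
        user, remaining = queue.popleft()
        done = min(quantum, remaining)  # run this user's program for one slice
        schedule.append((user, done))
        remaining -= done
        if remaining > 0:
            queue.append((user, remaining))  # unfinished work goes to the back of the line
    return schedule


# Three users share one machine, one unit of work per turn.
print(time_share({"alice": 3, "bob": 1, "carol": 2}, quantum=1))
# [('alice', 1), ('bob', 1), ('carol', 1), ('alice', 1), ('carol', 1), ('alice', 1)]
```

No single user waits for another's entire program to finish: Bob's one-unit job completes on the second slice even though Alice arrived first.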

People connected to this shared computer through a "terminal": a keyboard paired with a printer or screen that transmitted data back and forth over a physical cable. This gave each user the illusion of a personal computer running only their task when, in reality, the machine was shared. Before the internet was even invented, we had begun exploring the concept of the modern "cloud".

Time sharing was later extended into virtualization, which divides a single physical computer into multiple virtual computers. This allowed the creation of isolated enclaves where each user, whether a person or a corporation, could securely run their own programs without interfering with the others sharing the same hardware.
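A toy Python model of the idea: a hypothetical `Host` carves its CPUs and memory into fixed slices, each handed to a separate `VirtualMachine` with its own private storage. Real hypervisors are far more involved (and some deliberately over-commit memory); the class names and the strict no-over-commit rule here are illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualMachine:
    owner: str
    cpus: int
    memory_gb: int
    # Each VM gets its own private storage; other tenants cannot see it.
    files: dict = field(default_factory=dict)


class Host:
    """A physical computer whose CPUs and memory are divided among isolated VMs."""

    def __init__(self, cpus, memory_gb):
        self.free_cpus = cpus
        self.free_memory_gb = memory_gb
        self.vms = []

    def create_vm(self, owner, cpus, memory_gb):
        # Reserve a fixed slice of the hardware; refuse requests that exceed what is left.
        if cpus > self.free_cpus or memory_gb > self.free_memory_gb:
            raise RuntimeError(f"not enough capacity left for {owner}")
        self.free_cpus -= cpus
        self.free_memory_gb -= memory_gb
        vm = VirtualMachine(owner, cpus, memory_gb)
        self.vms.append(vm)
        return vm


host = Host(cpus=8, memory_gb=32)
alice = host.create_vm("alice", cpus=4, memory_gb=16)
acme = host.create_vm("Acme Corp", cpus=2, memory_gb=8)
alice.files["notes.txt"] = "private"
print("notes.txt" in acme.files)            # False: tenants are isolated
print(host.free_cpus, host.free_memory_gb)  # 2 8
```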

Interconnection

In 1969, ARPANET (Advanced Research Projects Agency Network) marked the birth of the long-distance network that would become the Internet. In the years that followed, researchers developed the "TCP/IP" standards we still use today. Using these new tools, researchers (and their computers) could share their resources across vast distances.

In 1991, the World Wide Web was released to the public, and with a subscription to an internet service provider, anyone could connect to it. Before the turn of the century, early ideas about "cloud computing" laid the foundation for scalable servers.

This approach linked independent computers together over a network so they could share their resources in support of a unified service. Distributed computing enabled websites to support a hundred thousand users or more by balancing the workload across multiple computers instead of trying to juggle everyone on one system.
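Round-robin is one of the simplest ways to balance that workload: each new request goes to the next server in a fixed rotation. The sketch below assumes a hypothetical pool of identical servers named `web-1` to `web-3`; production load balancers usually also account for server health and current load (for example, "least connections").

```python
from collections import Counter
from itertools import cycle


class RoundRobinBalancer:
    """Send each incoming request to the next server in a fixed rotation."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("need at least one server")
        self._rotation = cycle(servers)

    def route(self):
        # Pick the next server; after the last one, wrap around to the first.
        return next(self._rotation)


# Hypothetical pool of three identical web servers sharing 100,000 requests.
balancer = RoundRobinBalancer(["web-1", "web-2", "web-3"])
load = Counter(balancer.route() for _ in range(100_000))
print(load)  # each server handles roughly a third of the requests
```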

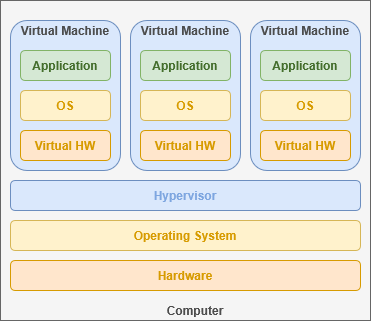

Whenever a service was accessed over the internet, the user could be geo-located and directed to the server that was closest to them. This provided a better connection, split the workload and created more space for data storage. As a result, data centers quickly proliferated across the globe.
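Real services typically route users with DNS or anycast and care about network latency rather than straight-line distance. As a simplifying assumption, this Python sketch picks the server with the smallest great-circle (haversine) distance to the user. The region names and coordinates are hypothetical approximations.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two (latitude, longitude) points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    # Clamp to 1.0 so floating-point error can never push asin out of its domain.
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def nearest_server(user_lat, user_lon, servers):
    """Return the name of the server geographically closest to the user."""
    return min(servers, key=lambda name: haversine_km(user_lat, user_lon, *servers[name]))


# Hypothetical server locations (approximate latitude, longitude).
SERVERS = {
    "us-east": (39.0, -77.5),       # northern Virginia
    "eu-west": (53.3, -6.3),        # Dublin
    "ap-southeast": (1.35, 103.8),  # Singapore
}

print(nearest_server(48.86, 2.35, SERVERS))     # user in Paris -> eu-west
print(nearest_server(-33.87, 151.21, SERVERS))  # user in Sydney -> ap-southeast
```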

Data Centers

By the early 2000s, technology companies had invested heavily in data center infrastructure. Under this model, businesses could forgo hosting their own server computers and instead rely on infrastructure maintained by someone else.

These distributed server farms became increasingly integrated into our everyday lives, both personally and professionally. Google and Apple both released services targeting corporations and consumers, offering access to cloud-based tools. Amazon introduced "cloud" computing and storage services, enabling businesses to rent computer resources like processing power or storage space.

Pictured: ARSAT Data Center and Google Data Center.

Take a look at the data centers located near you; there are more than you might think!

Contemporary cloud computing combines virtualization, distributed computing, and secure internet connections to build vast server farm infrastructures around the globe. By dividing up server clusters into isolated software environments, data centers create the digital equivalent of an apartment building housing multiple tenants.

When contracting out digital infrastructure services, corporations may lose physical custody of their data, since it can be nearly impossible to keep track of where it is stored. Under this paradigm, companies can more easily shirk their responsibility for digital stewardship: keeping data safe, secure and up-to-date.

Everything as a Service

The decentralized infrastructure of data centers has fomented considerable uncertainty about data privacy, unauthorized access and legal compliance. When digital data exists outside a company's physical control, can the company reliably ensure its security? On the other hand, could leaving data storage to industry professionals improve its baseline security?

For better or worse, data centers are the dominant paradigm and currently power much of the internet. They are now a driving force behind the proliferation and expansion of technologies around the globe. Under this pervasive business model, any digital service can be provided as a paid subscription.

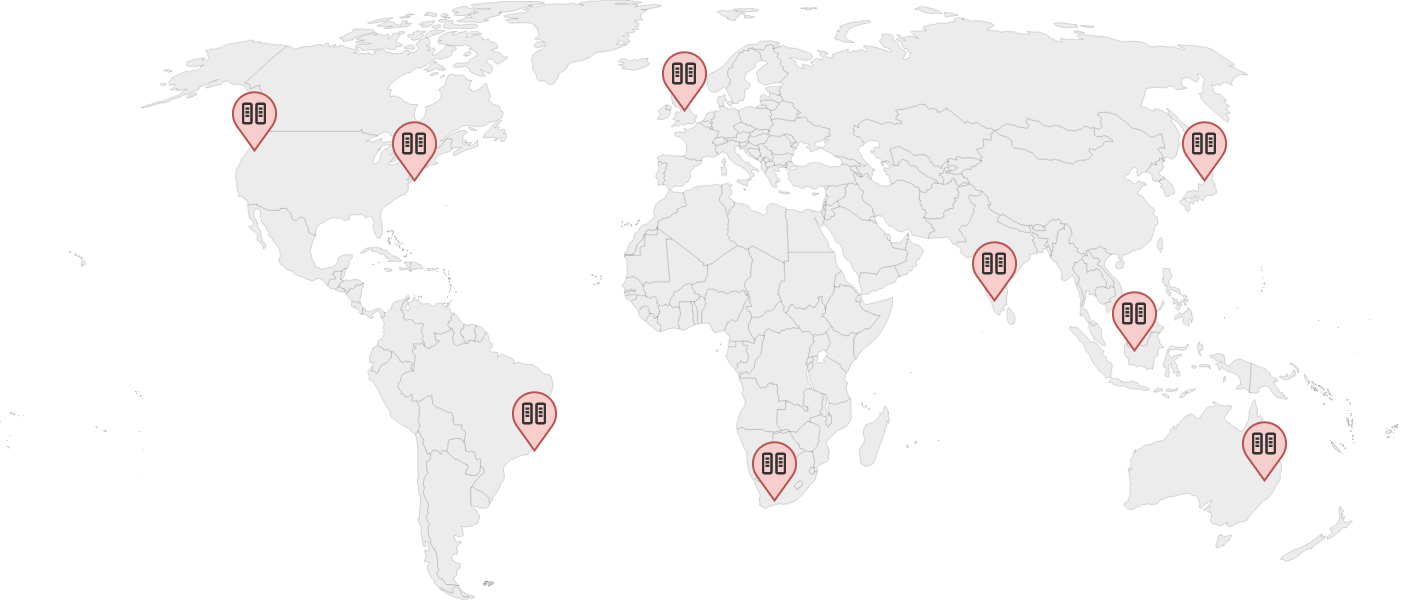

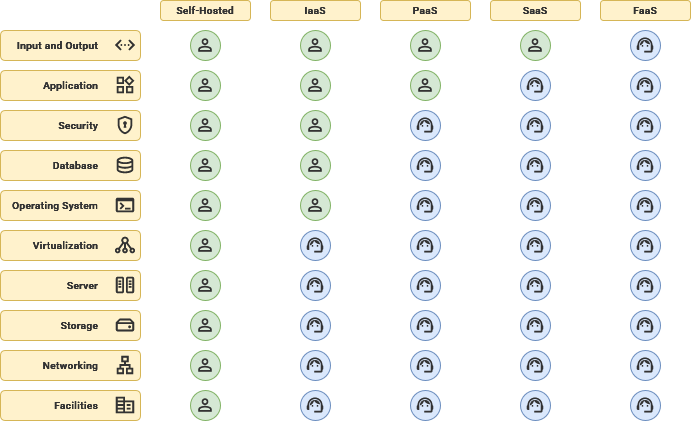

This vast infrastructure has led to a standardized "cloud computing" service model built around a granular spectrum, which dictates how much of the service is managed by the client versus the cloud computing service provider:

The practice of a provider renting …

Subscription to service …

Connects …

On one end, the client maintains complete control over how they use their rented hardware. On the other, everything happens behind the scenes without the client ever being aware of it.
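One common, simplified way to picture this spectrum is as a stack of layers, with the provider managing everything below a cut-off point and the client managing everything above it. The Python sketch below uses the widely cited infrastructure (IaaS), platform (PaaS) and software (SaaS) as-a-service models; the layer names and boundaries are a simplifying assumption, and real offerings draw the lines differently.

```python
# Layers of a simplified application stack, from the bottom up.
LAYERS = ["hardware", "virtualization", "operating system", "runtime", "application"]

# How many layers (counted from the bottom) the provider manages in each model.
# These boundaries are a common simplification; real offerings vary.
PROVIDER_MANAGED = {
    "on-premises": 0,  # the client owns and runs everything itself
    "IaaS": 2,         # infrastructure as a service: client rents virtual machines
    "PaaS": 4,         # platform as a service: client deploys only its application
    "SaaS": 5,         # software as a service: client just uses finished software
}


def responsibilities(model):
    """Split the stack into provider-managed and client-managed layers."""
    split = PROVIDER_MANAGED[model]
    return {"provider": LAYERS[:split], "client": LAYERS[split:]}


for model in PROVIDER_MANAGED:
    print(f"{model:12} client manages: {responsibilities(model)['client']}")
```

Moving down the dictionary shifts layers from the client's list to the provider's, which is exactly the trade between control and convenience described above.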

While these technologies were groundbreaking for building global internet platforms, they have also had significant impacts on privacy and technology development. For example, you may lose access to your data at any point if a service provider decides it no longer wants to host your content.

On its current trajectory, cloud computing threatens to monopolize and privatize the very infrastructure that powers the Internet. More than 85% of businesses worldwide are expected to adopt a "cloud-first" approach by 2025.

Self-Hosting

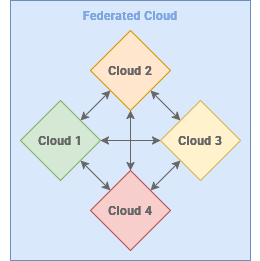

Federation is a nascent computing concept that allows a single service to operate across multiple autonomous servers. By decentralizing the ownership of software, we can ensure that no one entity can unilaterally control the service. The Fediverse, a collection of social networking services, operates using a standardized protocol (ActivityPub) that allows these services to communicate while each maintains its individuality.
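The core idea of federation fits in a small toy model. In the real Fediverse, servers exchange ActivityPub messages over HTTP and typically look up accounts with WebFinger; this Python sketch replaces all of that with an in-memory dictionary, and the `Server` class and `.example` domains are hypothetical. It shows only that each server owns its own users and that the domain in a handle tells a server where to deliver.

```python
class Server:
    """One autonomous server in a toy federated network (hypothetical)."""

    def __init__(self, domain, network):
        self.domain = domain
        self.inboxes = {}       # local users only: this server alone stores their messages
        self.network = network  # stands in for the internet: maps domain -> Server
        network[domain] = self

    def register(self, username):
        self.inboxes[username] = []

    def send(self, sender, recipient, text):
        """Deliver a message from a local user to a handle such as '@bob@photos.example'."""
        if sender not in self.inboxes:
            raise LookupError(f"{sender} is not a user on {self.domain}")
        # The part after the second '@' names the server responsible for the recipient.
        user, _, domain = recipient.lstrip("@").partition("@")
        target = self.network.get(domain)
        if target is None or user not in target.inboxes:
            raise LookupError(f"unknown recipient {recipient}")
        target.inboxes[user].append((f"@{sender}@{self.domain}", text))


network = {}
social = Server("social.example", network)
photos = Server("photos.example", network)
social.register("alice")
photos.register("bob")
social.send("alice", "@bob@photos.example", "Hello from another server!")
print(photos.inboxes["bob"])  # [('@alice@social.example', 'Hello from another server!')]
```

Neither server controls the other: `photos.example` decides who its users are, and `social.example` can only deliver to accounts that server actually hosts.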

By utilizing these same cloud computing technologies, anyone can host their own services, even from home. Linux, Docker and much of the other necessary software are open-source and available for free. When self-hosting your own cloud server, you have the power (and the responsibility) to act as your own infrastructure, platform and software provider.
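As a minimal illustration of running your own service, Python's standard library can serve a web page with no third-party software. The handler and greeting below are hypothetical, and the Python documentation does not recommend `http.server` for production; a real self-hosted service would usually run dedicated server software, often inside a container.

```python
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Answers every GET request with a short plain-text greeting."""

    def do_GET(self):
        body = b"Hello from my self-hosted server!\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(host="127.0.0.1", port=8080):
    """Create (but do not start) an HTTP server bound to host:port."""
    return ThreadingHTTPServer((host, port), HelloHandler)
```

Calling `make_server().serve_forever()` serves the page at http://127.0.0.1:8080 until interrupted. Binding to 127.0.0.1 keeps it reachable only from the same machine; exposing it to others would mean binding to another address and configuring your router and firewall, at which point keeping it secure becomes your job.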